Variance Of Least Squares Estimators Matrix Form Youtube

This video derives the variance of the least squares estimators under the assumptions of no serial correlation and homoscedastic errors. It follows from the previous video, which covers the assumptions of the linear regression model in matrix form, and uses those assumptions to derive and show the estimators' properties.
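The derivation can be sketched in a few lines. This is the standard argument, not a transcript of the video. It assumes the model $\mathbf{y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\epsilon}$, with $\mathbf{X}$ fixed and of full column rank, $E[\boldsymbol{\epsilon}] = \mathbf{0}$, and $E[\boldsymbol{\epsilon}\boldsymbol{\epsilon}^T] = \sigma^2\mathbf{I}_n$. That last condition means homoscedastic, serially uncorrelated errors. Since $E[\hat{\boldsymbol{\beta}}] = \boldsymbol{\beta}$, the variance is the expected outer product of $\hat{\boldsymbol{\beta}} - \boldsymbol{\beta}$:

$$
\begin{aligned}
\hat{\boldsymbol{\beta}} &= (\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\mathbf{y}
  = \boldsymbol{\beta} + (\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\boldsymbol{\epsilon},\\
\operatorname{Var}(\hat{\boldsymbol{\beta}})
  &= E\big[(\hat{\boldsymbol{\beta}}-\boldsymbol{\beta})(\hat{\boldsymbol{\beta}}-\boldsymbol{\beta})^T\big]
   = (\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\,E[\boldsymbol{\epsilon}\boldsymbol{\epsilon}^T]\,\mathbf{X}(\mathbf{X}^T\mathbf{X})^{-1}\\
  &= \sigma^2(\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\mathbf{X}(\mathbf{X}^T\mathbf{X})^{-1}
   = \sigma^2(\mathbf{X}^T\mathbf{X})^{-1}.
\end{aligned}
$$

Both error assumptions enter only through $E[\boldsymbol{\epsilon}\boldsymbol{\epsilon}^T] = \sigma^2\mathbf{I}_n$. With a general error covariance $\boldsymbol{\Omega}$, the same steps give $(\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\boldsymbol{\Omega}\mathbf{X}(\mathbf{X}^T\mathbf{X})^{-1}$, which does not simplify.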

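A Graduate Course In Econometrics Lecture 14 Variance Of Least…

Hat matrix: the hat matrix "puts a hat on $\mathbf{y}$". We can also express the fitted values directly in terms of only the $\mathbf{X}$ and $\mathbf{y}$ matrices:
$$ \hat{\mathbf{y}} = \mathbf{X}\hat{\boldsymbol{\beta}} = \mathbf{X}(\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\mathbf{y} = \mathbf{H}\mathbf{y}. $$
This defines $\mathbf{H} = \mathbf{X}(\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T$, the "hat matrix". The hat matrix plays an important role in diagnostics for regression analysis.

So, given that the least squares estimator of $\boldsymbol{\beta}$ is
$$ \hat{\boldsymbol{\beta}} = (\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\mathbf{y} $$
and that $\mathbf{y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\epsilon}$, where $\boldsymbol{\epsilon}$ is a vector of independent zero-mean normals all with the same variance $\sigma^2$, what is the covariance matrix of $\hat{\boldsymbol{\beta}}$?

The variance-covariance matrix of the least squares parameter estimates is easily derived from (3.6) and is given by
$$ \operatorname{Var}(\hat{\boldsymbol{\beta}}) = (\mathbf{X}^T\mathbf{X})^{-1}\sigma^2. \tag{3.8} $$
Typically one estimates the variance $\sigma^2$ by
$$ \hat{\sigma}^2 = \frac{1}{n-p-1}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2. $$
Dividing by $n-p-1$ rather than $n$ accounts for the $p+1$ estimated coefficients ($p$ predictors plus an intercept). It makes $\hat{\sigma}^2$ an unbiased estimate of $\sigma^2$.

Unfortunately, there is generally no simple formula for the variance of a quadratic form unless the random vector is Gaussian. If $\mathbf{Z} \sim \mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma})$ and $\mathbf{C}$ is symmetric, then
$$ \operatorname{Var}(\mathbf{Z}^T\mathbf{C}\mathbf{Z}) = 2\operatorname{tr}(\mathbf{C}\boldsymbol{\Sigma}\mathbf{C}\boldsymbol{\Sigma}) + 4\boldsymbol{\mu}^T\mathbf{C}\boldsymbol{\Sigma}\mathbf{C}\boldsymbol{\mu}. $$

Least squares in matrix form: our data consist of $n$ paired observations of the predictor variable $X$ and the response variable $Y$, i.e., $(x_1, y_1), \ldots, (x_n, y_n)$.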
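The matrix formulas for $\hat{\boldsymbol{\beta}}$, the hat matrix, $\hat{\sigma}^2$ and $\operatorname{Var}(\hat{\boldsymbol{\beta}})$ can be sketched in NumPy as follows. The sketch assumes a design matrix whose first column is an intercept, so it has $p+1$ columns for $p$ predictors. The helper name `ols_summary`, the seed and the simulated data are hypothetical, chosen only for illustration. The function plugs $\hat{\sigma}^2$ into $\sigma^2(\mathbf{X}^T\mathbf{X})^{-1}$ to estimate the covariance.

```python
import numpy as np

def ols_summary(X, y):
    """Least squares in matrix form (hypothetical helper for illustration).

    X: (n, p+1) design matrix whose first column is all ones (intercept).
    y: (n,) response vector.
    """
    n, k = X.shape                      # k = p + 1 estimated coefficients
    XtX_inv = np.linalg.inv(X.T @ X)    # (X^T X)^{-1}; assumes full column rank
    beta_hat = XtX_inv @ X.T @ y        # (X^T X)^{-1} X^T y
    H = X @ XtX_inv @ X.T               # hat matrix: y_hat = H y
    y_hat = H @ y
    resid = y - y_hat
    sigma2_hat = resid @ resid / (n - k)    # divisor n - p - 1
    cov_beta_hat = sigma2_hat * XtX_inv     # estimated Var(beta_hat)
    return beta_hat, H, y_hat, sigma2_hat, cov_beta_hat

# Simulated example data (arbitrary values, not from the source).
rng = np.random.default_rng(0)
n, p = 50, 2
X = np.column_stack([np.ones(n), rng.normal(size=(n, p))])
beta_true = np.array([1.0, 2.0, -0.5])
y = X @ beta_true + rng.normal(scale=0.3, size=n)

beta_hat, H, y_hat, sigma2_hat, cov_beta_hat = ols_summary(X, y)
print("beta_hat:", beta_hat)
print("sigma2_hat:", sigma2_hat)
print("standard errors:", np.sqrt(np.diag(cov_beta_hat)))
```

The explicit inverse is used only because it mirrors $(\mathbf{X}^T\mathbf{X})^{-1}$ in the formulas. For real work, `np.linalg.solve` or `np.linalg.lstsq` is numerically preferable. Building the full $n \times n$ hat matrix is likewise only sensible for small $n$.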

Statistical Properties Of Least Squares Estimators Youtube

These notes will not remind you how matrix algebra works. However, they will review some results about calculus with matrices, and about expectations and variances with vectors and matrices. Throughout, bold-faced letters denote matrices, as in $\mathbf{a}$ as opposed to a scalar $a$.
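One of the results about variances with vectors and matrices is easy to sanity-check by simulation. For $\mathbf{Z} \sim \mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma})$ and symmetric $\mathbf{C}$, the Gaussian quadratic-form variance is $\operatorname{Var}(\mathbf{Z}^T\mathbf{C}\mathbf{Z}) = 2\operatorname{tr}(\mathbf{C}\boldsymbol{\Sigma}\mathbf{C}\boldsymbol{\Sigma}) + 4\boldsymbol{\mu}^T\mathbf{C}\boldsymbol{\Sigma}\mathbf{C}\boldsymbol{\mu}$. The sketch compares this closed form with a Monte Carlo estimate. The values of $\boldsymbol{\mu}$, $\boldsymbol{\Sigma}$, $\mathbf{C}$ and the sample size are arbitrary illustrative choices, not from the source.

```python
import numpy as np

def quad_form_var(mu, Sigma, C):
    """Closed-form Var(Z^T C Z) for Z ~ N(mu, Sigma) and symmetric C."""
    CS = C @ Sigma
    return 2.0 * np.trace(CS @ CS) + 4.0 * mu @ CS @ C @ mu

rng = np.random.default_rng(1)
mu = np.array([1.0, -0.5, 0.25])
A = rng.normal(size=(3, 3))
Sigma = A @ A.T + 0.5 * np.eye(3)   # symmetric positive definite covariance
B = rng.normal(size=(3, 3))
C = (B + B.T) / 2                   # symmetric matrix defining the quadratic form

Z = rng.multivariate_normal(mu, Sigma, size=400_000)   # each row is one draw of Z
q = np.einsum("ni,ij,nj->n", Z, C, Z)                  # Z^T C Z for every draw

exact = quad_form_var(mu, Sigma, C)
mc = q.var(ddof=1)
print(f"closed form: {exact:.4f}  Monte Carlo: {mc:.4f}")
```

As a quick special case, $\boldsymbol{\mu} = \mathbf{0}$ and $\boldsymbol{\Sigma} = \mathbf{C} = \mathbf{I}_3$ make $\mathbf{Z}^T\mathbf{Z}$ a $\chi^2_3$ variable, whose variance is $6$. The formula gives $2\operatorname{tr}(\mathbf{I}_3) = 6$.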

Least Square Estimators Variance Of Estimators Using Matrices Youtube
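A simulation makes $\operatorname{Var}(\hat{\boldsymbol{\beta}}) = \sigma^2(\mathbf{X}^T\mathbf{X})^{-1}$ concrete. Hold the design matrix fixed, redraw independent homoscedastic errors many times, refit each time, and compare the empirical covariance of the estimates with the formula. This is a sketch under those assumptions. The design, $\boldsymbol{\beta}$, $\sigma$ and the replication count are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p, sigma = 40, 2, 0.5
X = np.column_stack([np.ones(n), rng.normal(size=(n, p))])  # fixed design with intercept
beta = np.array([0.5, 1.5, -1.0])
reps = 20_000

# Each column of E is one draw of the error vector, iid N(0, sigma^2).
E = rng.normal(scale=sigma, size=(n, reps))
Y = (X @ beta)[:, None] + E                   # (n, reps) simulated responses
B = np.linalg.solve(X.T @ X, X.T @ Y)         # (p+1, reps): beta_hat for every draw

emp_cov = np.cov(B)                           # rows of B are the coefficients
theory = sigma**2 * np.linalg.inv(X.T @ X)    # sigma^2 (X^T X)^{-1}
print(np.round(emp_cov, 5))
print(np.round(theory, 5))
```

Solving all replications in one `np.linalg.solve` call works because each column of `Y` is a separate right-hand side. The empirical mean of the columns of `B` should also sit close to `beta`, reflecting unbiasedness.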

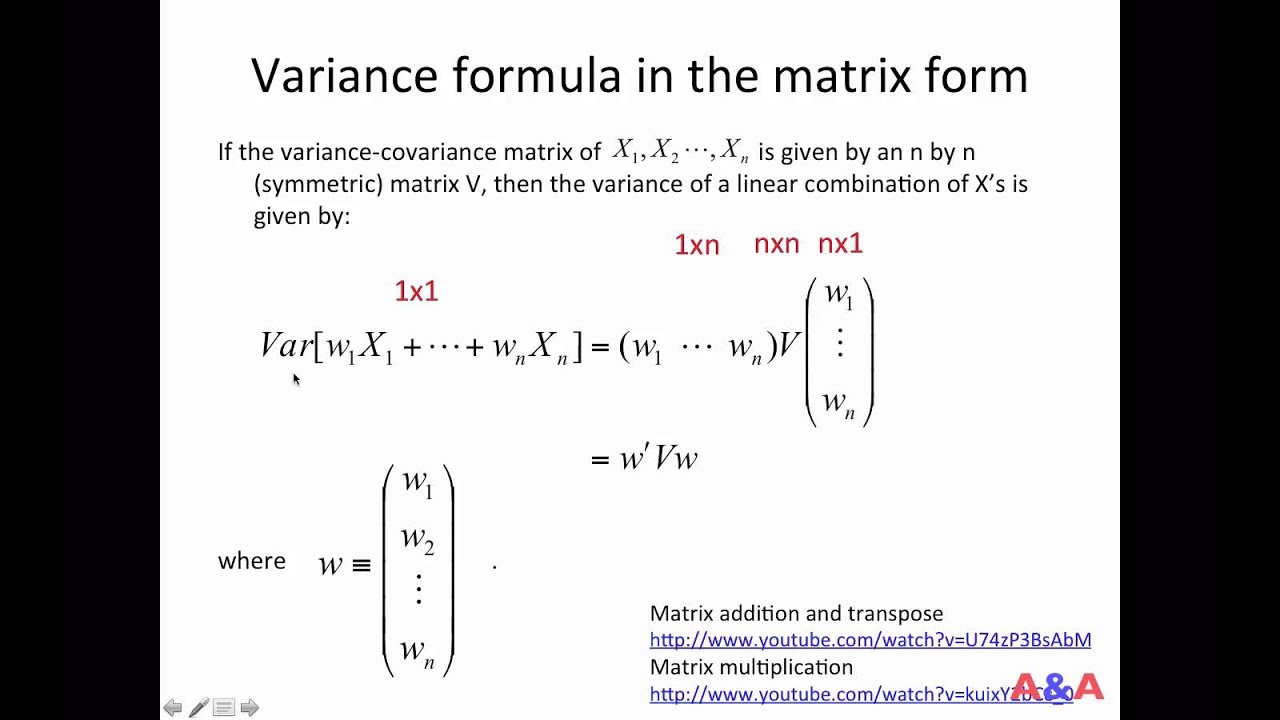

Variance Formula In The Matrix Form Youtube
