Using GPU With Docker On Kubernetes Hydrovlero

Install the NVIDIA GPU Operator using Helm. As the last piece of the puzzle, we need to let Kubernetes know that we have nodes with GPUs on them. The NVIDIA GPU Operator creates, configures, and manages GPUs atop Kubernetes and is installed via a Helm chart. Install Helm following the official instructions.

After running the installation script that enables the NVIDIA Docker options, verify that NVIDIA Docker is enabled by running Docker with the GPU options: `docker run --gpus all nvidia/cuda:10.0-base nvidia-smi`.
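
The Helm-based install described above can be sketched as a small Python helper that assembles the commands and prints them. This is a minimal sketch, not the article's own script: the repository URL, chart name, namespace, and flags are assumptions taken from NVIDIA's published Helm instructions, and the function names are hypothetical.

```python
import shlex
import shutil
import subprocess

# Assumptions (not from the article): repository URL, chart name, and namespace
# follow NVIDIA's published Helm instructions; adjust them for your cluster.
HELM_REPO_NAME = "nvidia"
HELM_REPO_URL = "https://helm.ngc.nvidia.com/nvidia"
CHART = "nvidia/gpu-operator"
NAMESPACE = "gpu-operator"


def gpu_operator_install_commands():
    """Return the Helm commands as argument lists, in the order they should run."""
    return [
        ["helm", "repo", "add", HELM_REPO_NAME, HELM_REPO_URL],
        ["helm", "repo", "update"],
        [
            "helm", "install", "--wait", "--generate-name",
            "--namespace", NAMESPACE, "--create-namespace",
            CHART,
        ],
    ]


def run_install(dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in gpu_operator_install_commands():
        print(shlex.join(cmd))
        if not dry_run:
            if shutil.which("helm") is None:
                raise RuntimeError("helm not found on PATH")
            subprocess.run(cmd, check=True)


# Dry run: only prints the commands, nothing is installed.
run_install(dry_run=True)
```

Calling `run_install(dry_run=False)` would execute the commands against whatever cluster your current kubeconfig points at, so the dry run is the safer default while checking the values.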

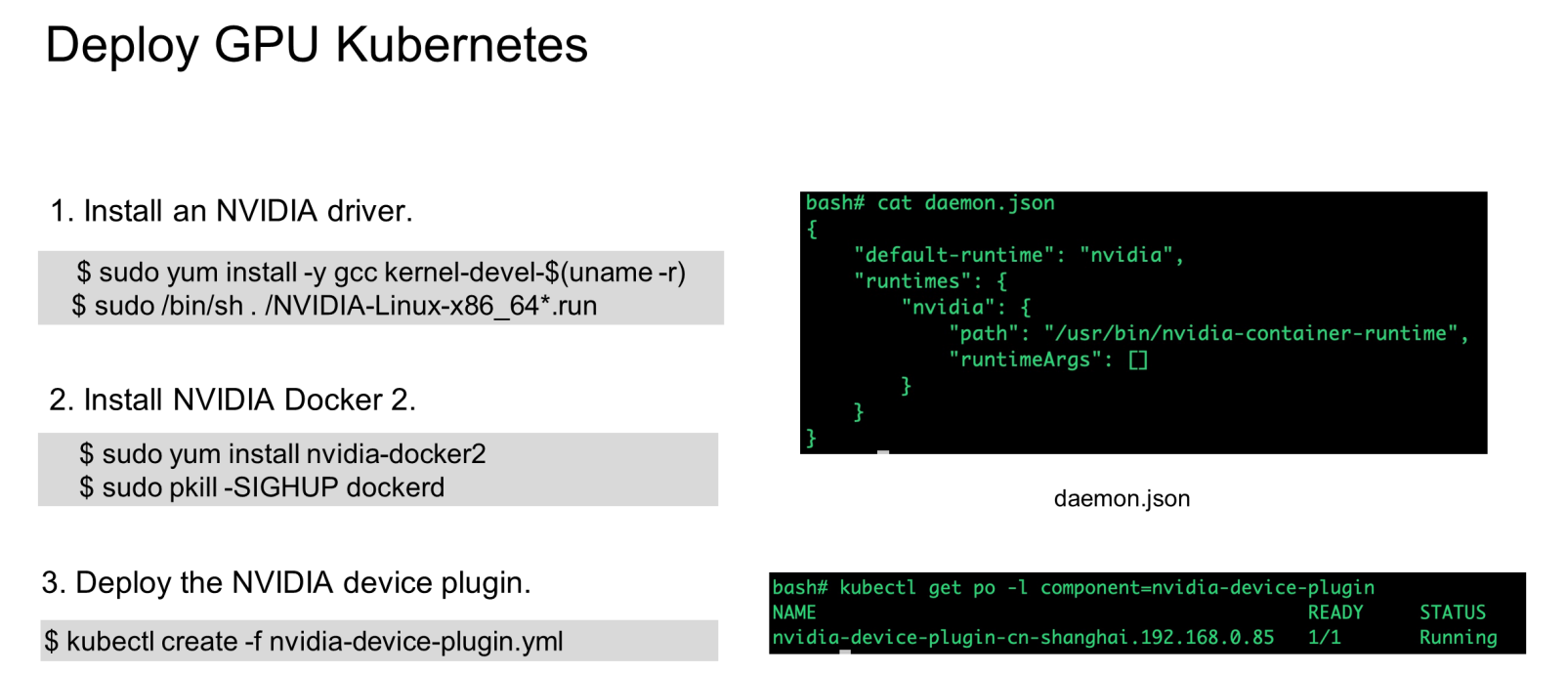

Let us install the NVIDIA device plugin so that our Kubernetes cluster can use GPUs. The easiest way is to install the plugin with Helm. If you don't have Helm, it is simple to install. Go to your…

Look at the contents of `nvidia-smi.yaml`, specifically lines 6 through 8. Lines 6 and 7 tell Kubernetes which node the pod is allowed to run on, and line 8 tells Kubernetes to use the NVIDIA runtime instead of the default containerd runtime. The NVIDIA runtime automatically copies everything your pod needs to use the GPU. You can check this like so: …

To address the challenge of GPU utilization in Kubernetes (K8s) clusters, NVIDIA offers multiple GPU concurrency and sharing mechanisms to suit a broad range of use cases. The latest addition is the new GPU time-slicing APIs, now broadly available in Kubernetes with NVIDIA K8s Device Plugin 0.12.0 and the NVIDIA GPU Operator 1.11.

Now that you have a tested and working container image, you can create an Airflow DAG that uses the KubernetesPodOperator to run tasks on a GCP Kubernetes cluster with NVIDIA GPU support. Use…
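
The article's `nvidia-smi.yaml` is not reproduced here, so the following is only a sketch of what such a pod could look like, built as a Python dict and printed as JSON (which `kubectl apply -f` also accepts). The function name, the pod name, the node label `nvidia.com/gpu.present`, and the existence of a RuntimeClass called `nvidia` are assumptions; the line numbers will not match the file the text refers to.

```python
import json


def nvidia_smi_pod(node_selector, image="nvidia/cuda:11.4.0-base-ubuntu20.04"):
    """Build a pod manifest that runs nvidia-smi once on a selected GPU node."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "nvidia-smi"},
        "spec": {
            "restartPolicy": "Never",
            # Restricts which nodes the pod may be scheduled on.
            "nodeSelector": node_selector,
            # Assumes a RuntimeClass named "nvidia" exists in the cluster.
            "runtimeClassName": "nvidia",
            "containers": [
                {
                    "name": "nvidia-smi",
                    "image": image,
                    "command": ["nvidia-smi"],
                    # Requests one GPU from the NVIDIA device plugin.
                    "resources": {"limits": {"nvidia.com/gpu": 1}},
                }
            ],
        },
    }


# Assumed label; replace it with a label that actually exists on your GPU nodes.
manifest = nvidia_smi_pod({"nvidia.com/gpu.present": "true"})
print(json.dumps(manifest, indent=2))
```

The printed JSON can be piped to `kubectl apply -f -`; once the pod completes, `kubectl logs nvidia-smi` should show the same table that `nvidia-smi` prints on the host, provided the runtime class and device plugin are set up.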

Prerequisites: Linux, the latest NVIDIA GPU drivers, and minikube v1.32.0-beta.0 or later (docker driver only). When using the docker driver, ensure you have an NVIDIA driver installed. You can check by running `nvidia-smi`; if no driver is installed, follow the NVIDIA driver installation guide. Then check whether `bpf_jit_harden` is set to 0 with `sudo sysctl net.core.bpf_jit_harden`. If it…

Start a container and run the `nvidia-smi` command to check that your GPU is accessible. The output should match what you saw when running `nvidia-smi` on your host. The CUDA version may differ depending on the toolkit versions on your host and in your selected container image: `docker run -it --gpus all nvidia/cuda:11.4.0-base-ubuntu20.04 nvidia-smi`.
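
The `bpf_jit_harden` check and the container smoke test above can be sketched as a small preflight script. This is an illustrative sketch with hypothetical function names: it reads the kernel setting from `/proc/sys/net/core/bpf_jit_harden` (the file behind the `net.core.bpf_jit_harden` sysctl key, which usually needs root to read) and only prints the Docker command rather than running it.

```python
import shlex
from pathlib import Path
from typing import List, Optional

# File that backs the net.core.bpf_jit_harden sysctl key on Linux.
BPF_JIT_HARDEN = "/proc/sys/net/core/bpf_jit_harden"


def read_bpf_jit_harden(path: str = BPF_JIT_HARDEN) -> Optional[int]:
    """Return the setting as an int, or None if it is missing or unreadable."""
    try:
        return int(Path(path).read_text().strip())
    except (OSError, ValueError):
        # Missing file, insufficient permissions, or unexpected content.
        return None


def gpu_smoke_test_command(
    image: str = "nvidia/cuda:11.4.0-base-ubuntu20.04",
) -> List[str]:
    """Build the docker command that runs nvidia-smi with all GPUs exposed."""
    return ["docker", "run", "-it", "--gpus", "all", image, "nvidia-smi"]


value = read_bpf_jit_harden()
if value is None:
    print("bpf_jit_harden not readable; try: sudo sysctl net.core.bpf_jit_harden")
else:
    print(f"net.core.bpf_jit_harden = {value}")

# Printed only; copy it into a terminal to run the actual check.
print(shlex.join(gpu_smoke_test_command()))
```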
