Simple Linear Regression: Derivation of the Slope and Intercept in Two Ways (the Least Squares Criterion)

I derive the least squares estimators of the slope and intercept in simple linear regression, using summation notation and no matrices. I assume that the …
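Below is a sketch of one standard route, via calculus, written in the same summation notation. It assumes the data contain at least two distinct $x_i$ values, so that $\sum_{i}(x_i - \bar{x})^2 > 0$. The method is to set both partial derivatives of the sum of squared residuals to zero and solve:

$$
\begin{aligned}
S(\hat\beta_0,\hat\beta_1) &= \sum_{i=1}^{n}\bigl(y_i - \hat\beta_0 - \hat\beta_1 x_i\bigr)^2 \\
\frac{\partial S}{\partial \hat\beta_0} &= -2\sum_{i=1}^{n}\bigl(y_i - \hat\beta_0 - \hat\beta_1 x_i\bigr) = 0
  \;\Longrightarrow\; \hat\beta_0 = \bar{y} - \hat\beta_1 \bar{x} \\
\frac{\partial S}{\partial \hat\beta_1} &= -2\sum_{i=1}^{n} x_i\bigl(y_i - \hat\beta_0 - \hat\beta_1 x_i\bigr) = 0
  \;\Longrightarrow\; \sum_{i=1}^{n} x_i\bigl[(y_i - \bar{y}) - \hat\beta_1 (x_i - \bar{x})\bigr] = 0 \\
&\;\Longrightarrow\; \hat\beta_1 = \frac{\sum_{i=1}^{n} x_i (y_i - \bar{y})}{\sum_{i=1}^{n} x_i (x_i - \bar{x})}
  = \frac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n}(x_i - \bar{x})^2}
\end{aligned}
$$

The last equality uses $\sum_i (y_i - \bar{y}) = 0$ and $\sum_i (x_i - \bar{x}) = 0$. These identities let the leading $x_i$ in both sums be replaced by $x_i - \bar{x}$ without changing their values. Because $S$ is a convex quadratic in $(\hat\beta_0, \hat\beta_1)$, this stationary point is the minimum.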

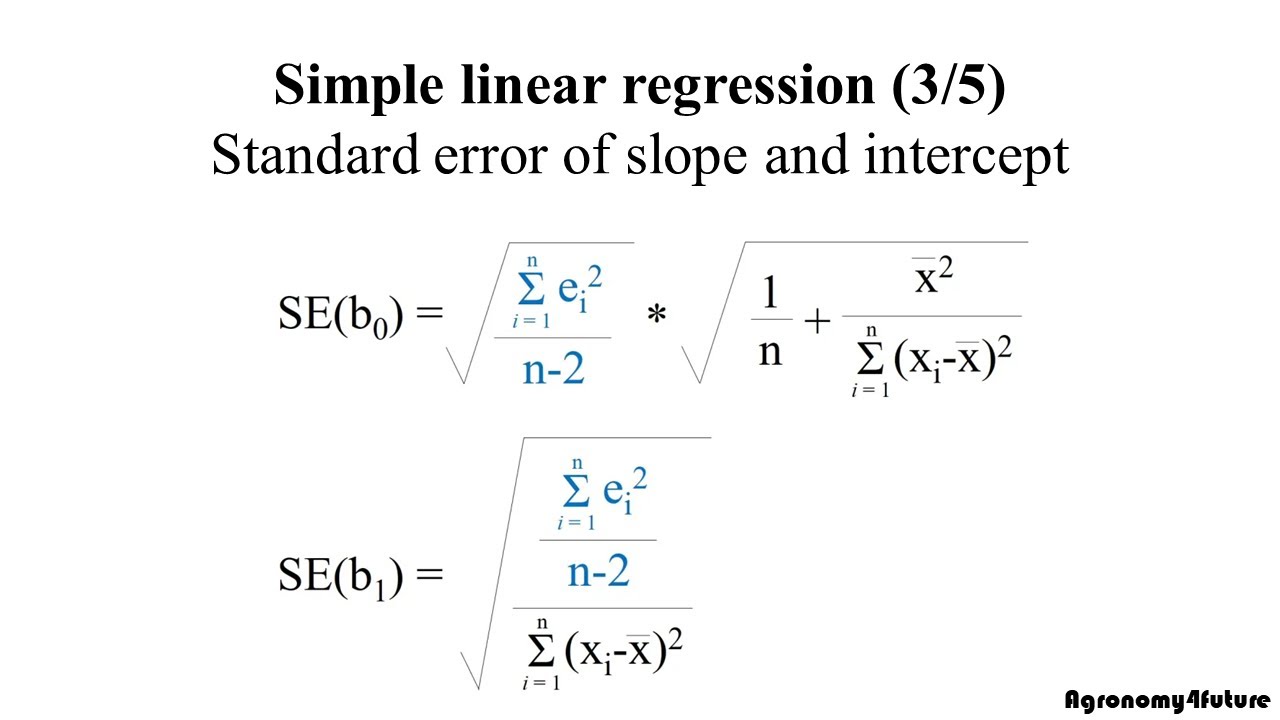

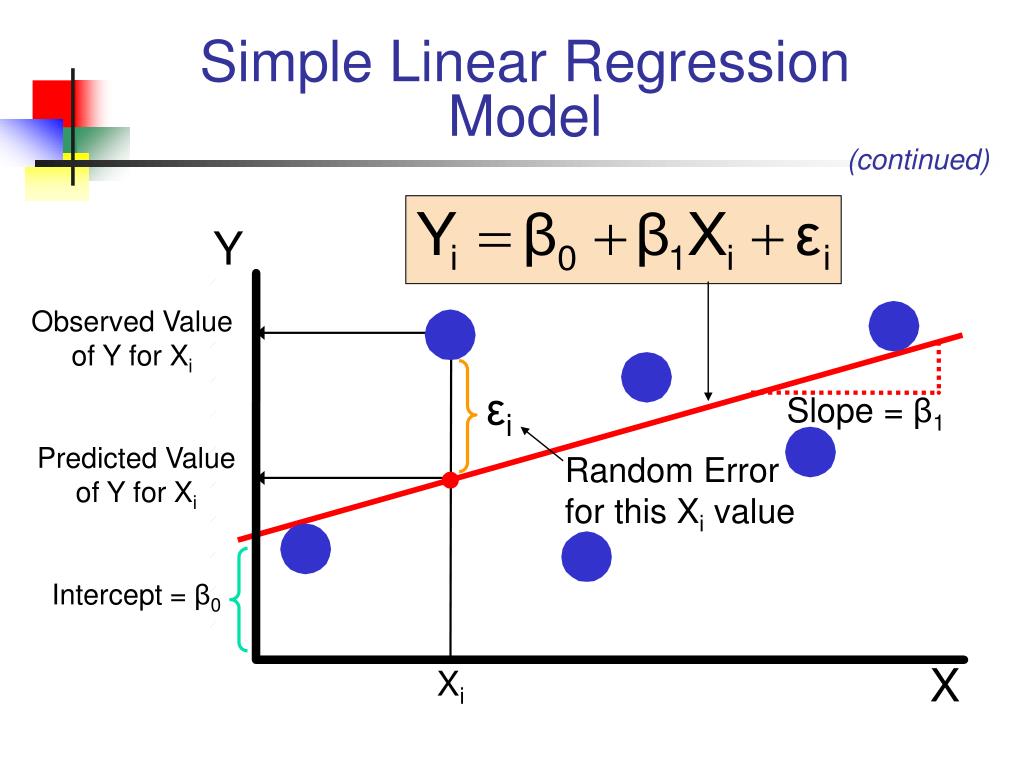

2.7 Derivation for Slope and Intercept

This document contains the mathematical details for deriving the least squares estimates of the slope ($\beta_1$) and intercept ($\beta_0$). We obtain the estimates $\hat\beta_1$ and $\hat\beta_0$ by finding the values that minimize the sum of squared residuals (SSR):

$$\mathrm{SSR} = \sum_{i=1}^{n} \left[y_i - \hat{y}_i\right]^2 = \sum_{i=1}^{n} \left[y_i - (\hat\beta_0 + \hat\beta_1 x_i)\right]^2$$

Simple linear regression involves the model $\hat{y} = \mu_{y \mid x} = \beta_0 + \beta_1 x$. The least squares estimates of $\beta_0$ and $\beta_1$ are:

$$\hat\beta_1 = \frac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n}(x_i - \bar{x})^2}, \qquad \hat\beta_0 = \bar{y} - \hat\beta_1 \bar{x}$$

As an optimization problem, the least squares criterion for estimating the regression coefficients $\beta_0, \beta_1$ is

$$\min_{\hat\beta_0,\,\hat\beta_1} S(\hat\beta_0, \hat\beta_1) \stackrel{\text{def}}{=} \sum_{i=1}^{n} \Bigl(y_i - \underbrace{(\hat\beta_0 + \hat\beta_1 x_i)}_{\hat{y}_i}\Bigr)^2$$

and the corresponding minimizers are called least squares estimators. Remark: another way is to maximize the …

Simple linear regression is the most commonly used technique for determining how one variable of interest (the response variable) is affected by changes in another variable (the explanatory variable). The terms "response" and "explanatory" mean the same thing as "dependent" and "independent."
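The following is a minimal NumPy sketch of the closed-form estimates. It uses small made-up data, and the helper names `ls_estimates` and `ssr` are illustrative rather than taken from any source. The sketch also cross-checks the result against `np.polyfit` with degree 1, and confirms that nudging either coefficient never lowers the SSR.

```python
import numpy as np

def ls_estimates(x, y):
    """Closed-form least squares intercept and slope for y ~ b0 + b1 * x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_bar, y_bar = x.mean(), y.mean()
    sxx = np.sum((x - x_bar) ** 2)
    if sxx == 0:
        # All x_i equal: the slope is not identifiable.
        raise ValueError("need at least two distinct x values")
    b1 = np.sum((x - x_bar) * (y - y_bar)) / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1

def ssr(b0, b1, x, y):
    """Sum of squared residuals for the candidate line b0 + b1 * x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.sum((y - (b0 + b1 * x)) ** 2))

# Hypothetical example data.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
b0, b1 = ls_estimates(x, y)

# np.polyfit with degree 1 returns [slope, intercept].
slope, intercept = np.polyfit(x, y, 1)
print(b0, b1, slope, intercept)

# SSR is a convex quadratic, so moving away from the minimizer cannot reduce it.
best = ssr(b0, b1, x, y)
for d0, d1 in [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]:
    assert ssr(b0 + d0, b1 + d1, x, y) > best
```

For this data, $\bar{x} = 3$, $\bar{y} = 6.02$, $\sum (x_i - \bar{x})(y_i - \bar{y}) = 19.9$ and $\sum (x_i - \bar{x})^2 = 10$. These give $\hat\beta_1 = 1.99$ and $\hat\beta_0 = 0.05$, up to floating-point rounding.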

Setting the partial derivatives of the least squares criterion with respect to $\hat\beta_0$ and $\hat\beta_1$ to zero gives us a system of two linear equations in two unknowns. As we remember from linear algebra (or earlier), such a system has a unique solution unless one of its equations is redundant (see exercise 2). Notice that this existence and uniqueness of a least squares estimate assumes absolutely nothing about the data-generating process.

9.1 The Model Behind Linear Regression

[Figure 9.1: Mnemonic for the simple regression model (axes: x, y).]

… than ANOVA. If the truth is nonlinear, regression will make inappropriate predictions, but at least regression will have a chance to detect the nonlinearity.
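The two linear equations (the normal equations) can be written as a 2x2 system $A\,[\hat\beta_0, \hat\beta_1]^\top = c$, with $A = \begin{pmatrix} n & \sum x_i \\ \sum x_i & \sum x_i^2 \end{pmatrix}$ and $c = (\sum y_i, \sum x_i y_i)^\top$. The NumPy sketch below solves that system for made-up data. The helper `normal_equations` is hypothetical. Since $\det A = n \sum_i (x_i - \bar{x})^2$, one equation becomes redundant exactly when every $x_i$ is the same. The sketch detects this case with a rank check rather than relying on the solver to raise an error.

```python
import numpy as np

def normal_equations(x, y):
    """Build A and c for the normal equations A @ [b0, b1] = c."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    A = np.array([[n, x.sum()],
                  [x.sum(), np.sum(x * x)]])
    c = np.array([y.sum(), np.sum(x * y)])
    return A, c

# Hypothetical example data.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
A, c = normal_equations(x, y)
b0, b1 = np.linalg.solve(A, c)  # unique because det(A) != 0 here
print(b0, b1)

# With identical x values the second row is a multiple of the first,
# so A has rank 1 and the least squares estimate is not unique.
A_bad, _ = normal_equations([3, 3, 3], [1.0, 2.0, 4.0])
print(np.linalg.matrix_rank(A_bad))
```

For the data above, the system is $5\hat\beta_0 + 15\hat\beta_1 = 30.1$ and $15\hat\beta_0 + 55\hat\beta_1 = 110.2$. Its solution is $\hat\beta_0 = 0.05$ and $\hat\beta_1 = 1.99$, which agrees with the closed-form formulas.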
