Scaling Redis Blog

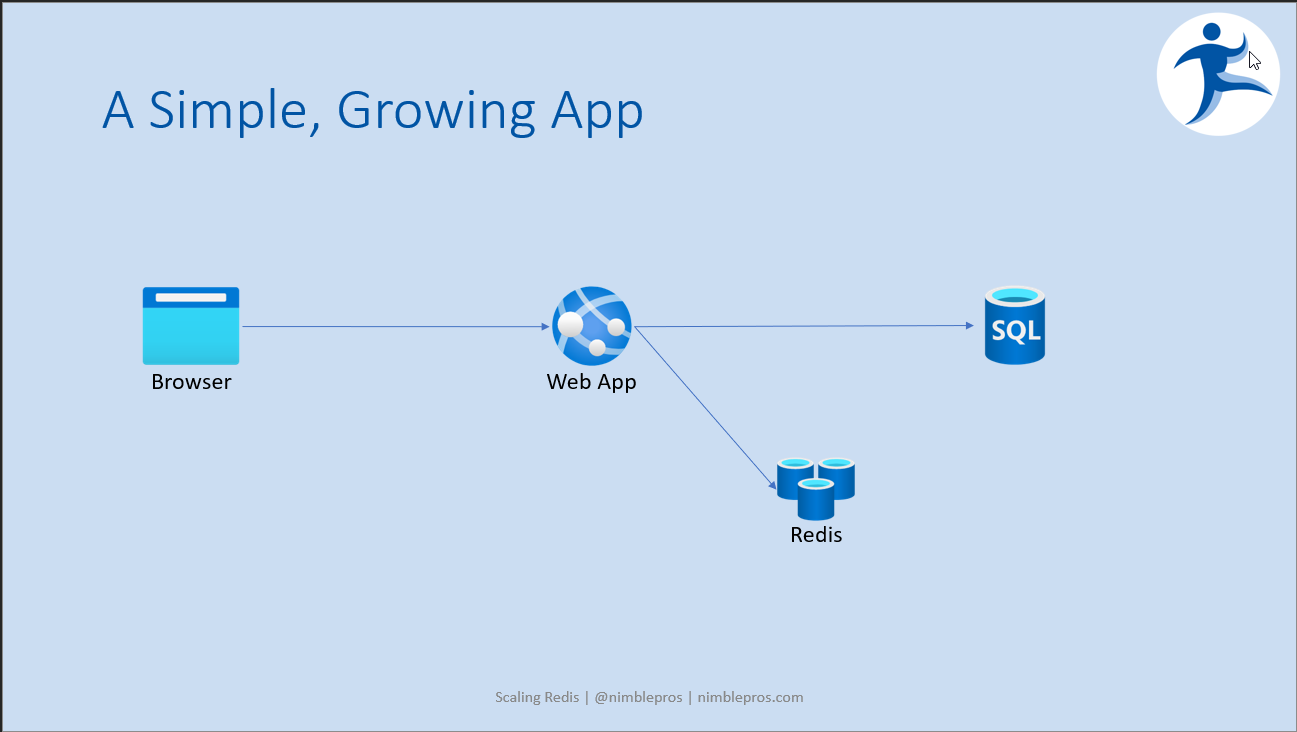

Scaling Redis. Date published: 14 November 2022. Redis is a popular open source cache server. When a web application reaches the point of needing more than one front-end server, or its database is under too much load, introducing a Redis server between the application server and its database is a common approach.

1. Vertical scaling. One of the most common misconceptions when scaling Redis is the belief that vertical scaling (adding more CPU, memory, or other resources) will resolve performance issues. While this might work in other systems, Redis's single-threaded handling of in-memory data operations limits the benefits of vertical scaling: commands execute on one thread, so additional CPU cores add little throughput.

To connect to Redis Cluster, you'll need a cluster-aware Redis client. See the documentation for your client of choice to determine its cluster support. You can also test your Redis Cluster using the redis-cli command line utility: `$ redis-cli -c -p 7000`, then at the prompt, `redis 127.0.0.1:7000> set foo bar`.

To manage these diverse uses efficiently and to support dynamic scaling of our Redis clusters, we developed a blue-green deployment process using GCP managed instance groups (MIGs). This process.

Scaling Redis without clustering. This blog explores scaling Redis, highlighting the complexities of Redis Cluster and presenting Dragonfly as a simpler, efficient alternative. It offers insights into vertical scaling, operational ease, and Dragonfly's ability to handle large-scale workloads on a single node. Oded Poncz.

Stay tuned for our next blog in this series of deep dives and let us know if you have any feedback by reaching out to us at cloud memorystore pm@google. With Memorystore for Redis Cluster, we introduced true zero-downtime scalability through a series of enhancements to the open source Redis engine.
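As a rough illustration of what a cluster-aware client does before sending a command such as the `set foo bar` above, the sketch below computes the Redis Cluster hash slot for a key: CRC16 (XMODEM variant) of the key, modulo 16384, with only the content of the first non-empty `{...}` hash tag hashed when one is present. The function names `hash_tag` and `key_slot` are hypothetical helpers for this example, not part of any Redis client library; a real client also keeps a slot-to-node map and follows redirections.

```python
import binascii

HASH_SLOTS = 16384  # number of hash slots in Redis Cluster


def hash_tag(key: bytes) -> bytes:
    """Return the part of the key that is hashed (hypothetical helper).

    If the key contains '{' followed later by '}' with at least one
    character in between, only that substring is hashed; otherwise the
    whole key is.
    """
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        if end > start + 1:  # a closing brace exists and the tag is non-empty
            return key[start + 1:end]
    return key


def key_slot(key: str) -> int:
    """Map a key to its hash slot: CRC16-XMODEM(key) mod 16384."""
    data = hash_tag(key.encode("utf-8"))
    # binascii.crc_hqx computes CRC-CCITT (polynomial 0x1021); with an
    # initial value of 0 this is the XMODEM variant.
    return binascii.crc_hqx(data, 0) % HASH_SLOTS


if __name__ == "__main__":
    for name in ("foo", "{user1000}.following", "{user1000}.followers"):
        print(name, "->", key_slot(name))
```

Keys that share a hash tag, such as the two `{user1000}` keys here, land in the same slot, which is what makes multi-key operations on them possible in a cluster.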

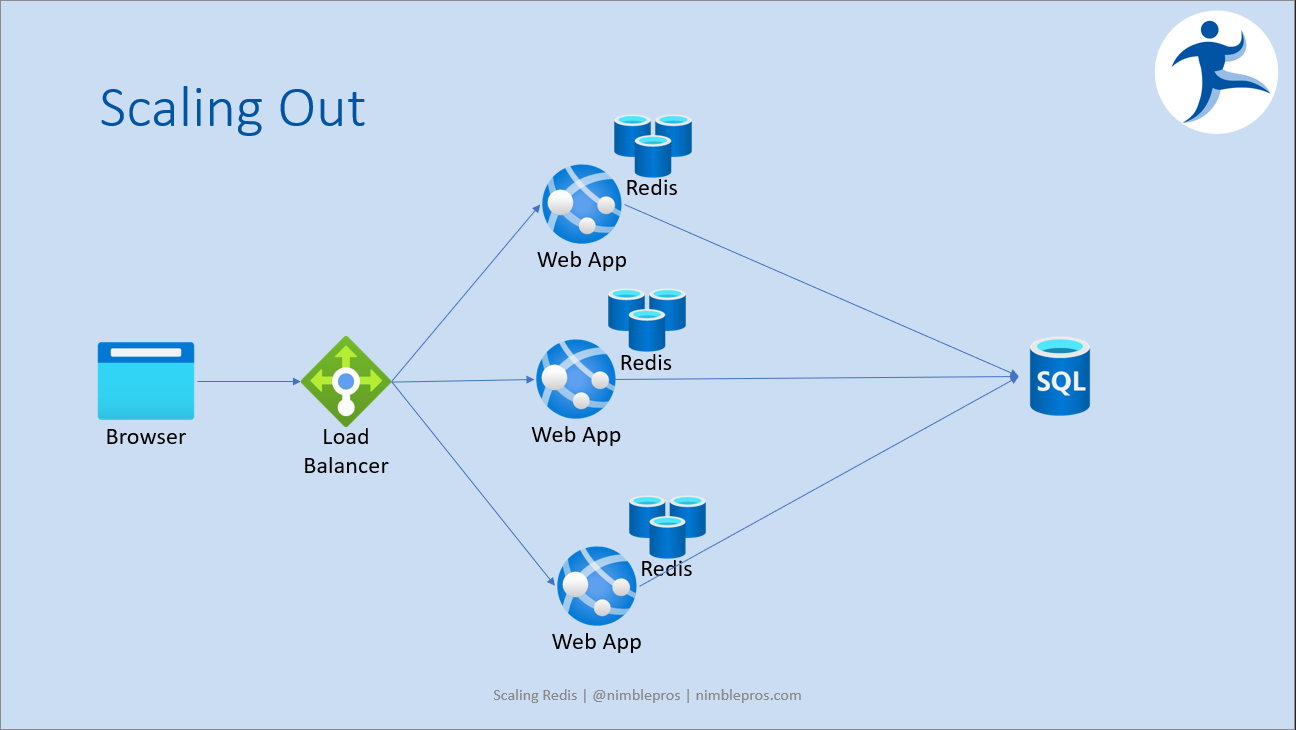

Linear Scaling With Redis Enterprise Redis

4. Scaling Redis horizontally. Horizontal scaling means "scaling out", or adding more servers to your Redis clusters. While Redis can run on a single node, Instaclustr Redis clusters always have a minimum of 3 primary (or master) nodes. We also recommend that for a production cluster you have a replication factor of 1.

Redis is a popular open source in-memory database that is widely used in modern applications. As your application grows, you may need to scale your Redis database to handle increasing amounts of data and requests. In this article, we will explore the two techniques for scaling Redis databases: sharding and partitioning. We will discuss the differences between them and provide guidance on how.
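To make the idea of spreading data across primaries concrete, here is a minimal sketch that divides Redis Cluster's 16384 hash slots into contiguous, near-equal ranges, one per primary node. The function `slot_ranges` is a hypothetical helper written for this example; it shows one even split and is not necessarily the exact assignment that `redis-cli` or a managed service would produce.

```python
HASH_SLOTS = 16384  # number of hash slots in Redis Cluster


def slot_ranges(primaries: int) -> list[tuple[int, int]]:
    """Split the slot space into contiguous (first, last) ranges.

    Ranges are inclusive. When the slots do not divide evenly, the
    earliest nodes each take one extra slot.
    """
    if primaries < 1:
        raise ValueError("at least one primary node is required")
    base, extra = divmod(HASH_SLOTS, primaries)
    ranges = []
    start = 0
    for i in range(primaries):
        size = base + (1 if i < extra else 0)
        ranges.append((start, start + size - 1))
        start += size
    return ranges


if __name__ == "__main__":
    # Three primaries, the minimum cluster size mentioned above.
    for node, (first, last) in enumerate(slot_ranges(3)):
        print(f"primary {node}: slots {first}-{last}")
```

Adding a primary means recomputing the ranges and migrating the slots that change owner, which is why scaling out involves moving data between nodes rather than only starting a new server.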
