OpenAI Assistants API w/ Retrieval vs. Custom RAG: Which Is Better For

The recent introduction of the OpenAI Assistants retrieval feature has sparked significant discussion in the AI community. This built-in feature incorporates retrieval-augmented generation (RAG) capabilities for question answering, allowing GPT language models to tap into additional knowledge to generate more accurate and relevant answers. In this video I share two customer support automation applications built on the retrieval-augmented generation (RAG) approach: github.

OpenAI RAG vs. Your Customized RAG: Which One Is Better? Zilliz Blog

A tangent about RAG. It’s awesome that OpenAI has built tools that incorporate this functionality into Assistants and custom GPTs with minimal effort, but there are a lot of reasons why it might.

Explain their process for getting retrieval to the level it’s at in the Assistants API. Where they explain the process of developing a custom solution for a top-tier European customer that gets OpenAI’s developer attention, tuned specifically to the customer’s dataset. Assistants is not that. RAG needs intelligence applied to such a.

The first thing that comes to mind is to use RAG with the Assistants API (AA): that is, send your prompt to a vector store, retrieve the context, and send this context along with the question to the AA. This then becomes part of the prompt history. The good news is that the model will now retain the “context” of your discussion “thread” so far.

RAG is the process of retrieving relevant contextual information from a data source and passing that information to a large language model alongside the user’s prompt. This information is used to improve the model’s output (generated text or images) by augmenting the model’s base knowledge. RAG is valuable for use cases where the model.
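
The flow described above (send the prompt to a vector store, retrieve the context, and pass that context along with the question) can be illustrated with a small, self-contained sketch. Everything in it is an assumption made for illustration: `embed` is a toy bag-of-words stand-in for a real embedding model, `ToyVectorStore` is a hypothetical in-memory store, and `thread` is a plain list standing in for an assistant thread's message history. No OpenAI call is made.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store: keeps each chunk next to its vector."""

    def __init__(self, chunks):
        self.items = [(chunk, embed(chunk)) for chunk in chunks]

    def search(self, query: str, k: int = 2):
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_message(question: str, store: ToyVectorStore, k: int = 2) -> str:
    """Augment the user's question with the retrieved context."""
    context = "\n".join(store.search(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


store = ToyVectorStore([
    "Refunds are issued within 14 days of purchase.",
    "Support is available by email on weekdays.",
    "Shipping takes 3 to 5 business days.",
])

# The augmented message is appended to the thread, so the retrieved
# context becomes part of the prompt history for later turns.
thread = []
thread.append({
    "role": "user",
    "content": build_message("How long do refunds take?", store, k=1),
})
```

In a real application the last step would send `thread` to the model; the point here is only that retrieval happens before the call and its result travels with the question.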

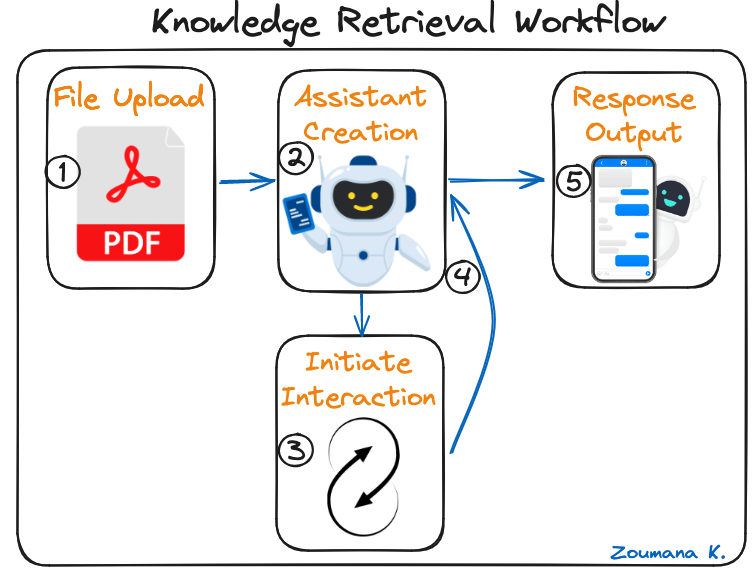

OpenAI Assistants API Tutorial DataCamp

This is the dream. My dev work with AI is getting easier and better than ever. If all the promises are true, I’m ready to vendor lock in with OpenAI. I dreamt about the day when developing RAG would get easier, especially at this level of scale, speed, and performance. I cannot wait to try the new API with big documents full of tables.

The Assistants API automatically chooses between two retrieval techniques: it either passes the file content in the prompt for short documents, or performs a vector search for longer documents. Retrieval currently optimizes for quality by adding all relevant content to the context of model calls. We plan to introduce other retrieval strategies.
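
The choice between the two retrieval techniques mentioned above can be pictured with a rough sketch. This is not OpenAI's implementation: the word-count threshold `max_inline_words`, the chunk size `chunk_words`, and the function names are all hypothetical, since the text does not say how the API decides what counts as a short document.

```python
def choose_strategy(document: str, max_inline_words: int = 200) -> str:
    """Pick 'inline' for short documents, 'vector_search' for longer ones.

    The threshold is an illustrative assumption, not a documented value.
    """
    return "inline" if len(document.split()) <= max_inline_words else "vector_search"


def split_into_chunks(document: str, chunk_words: int = 50):
    """Split a document into consecutive chunks of at most chunk_words words."""
    words = document.split()
    return [
        " ".join(words[i:i + chunk_words])
        for i in range(0, len(words), chunk_words)
    ]


def prepare(document: str, max_inline_words: int = 200, chunk_words: int = 50) -> dict:
    """Return the chosen strategy plus what would be handed to the next step."""
    if choose_strategy(document, max_inline_words) == "inline":
        # Short document: the whole text goes straight into the prompt.
        return {"strategy": "inline", "payload": [document]}
    # Long document: chunk it so the pieces can be indexed and searched.
    return {"strategy": "vector_search", "payload": split_into_chunks(document, chunk_words)}
```

A custom RAG pipeline makes this decision explicit and tunable, whereas the built-in retrieval makes it on your behalf.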

OpenAI Assistants API A to Z: Practitioner's Guide to Code Interpreter

Pros and Cons of Relying on the New OpenAI Assistants and Knowledge
