Image Captioning Deep Learning Model Train Model Progressively On

This video, titled "image captioning deep learning model, train model progressively on less memory | coding part 6", explains the steps to train the image ca […]

Create image captioning models: overview. Module 1 • 52 minutes to complete. This module teaches you how to create an image captioning model by using deep learning. You learn about the different components of an image captioning model, such as the encoder and decoder, and how to train and evaluate your model. By the end of this module, you […]
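One common way to read "train progressively on less memory" is to build training pairs lazily, one batch at a time, instead of materialising every (image, caption prefix, next word) triple up front. The sketch below illustrates that idea in plain Python. It is an assumption about the technique, not the video's actual code, and the names `progressive_batches` and `demo` are hypothetical.

```python
from typing import Iterator, List, Sequence, Tuple

# Hypothetical sample layout: (image id, caption encoded as token ids).
Sample = Tuple[str, List[int]]
# One training pair: (image id, caption prefix, next token to predict).
Pair = Tuple[str, List[int], int]


def progressive_batches(samples: Sequence[Sample], batch_size: int) -> Iterator[List[Pair]]:
    """Lazily yield batches of training pairs so only one batch is held in memory."""
    batch: List[Pair] = []
    for image_id, tokens in samples:
        # Each caption of n tokens expands to n - 1 (prefix -> next token) pairs.
        for i in range(1, len(tokens)):
            batch.append((image_id, tokens[:i], tokens[i]))
            if len(batch) == batch_size:
                yield batch
                batch = []
    if batch:
        # Emit the final, possibly smaller, batch.
        yield batch


# Toy data: two images with short captions (token ids are made up).
demo = [("img_a", [1, 5, 6, 2]), ("img_b", [1, 7, 2])]
batches = list(progressive_batches(demo, batch_size=2))
```

In a real pipeline the image id would be swapped for precomputed image features and each batch would be passed to the model's training step as it is produced.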

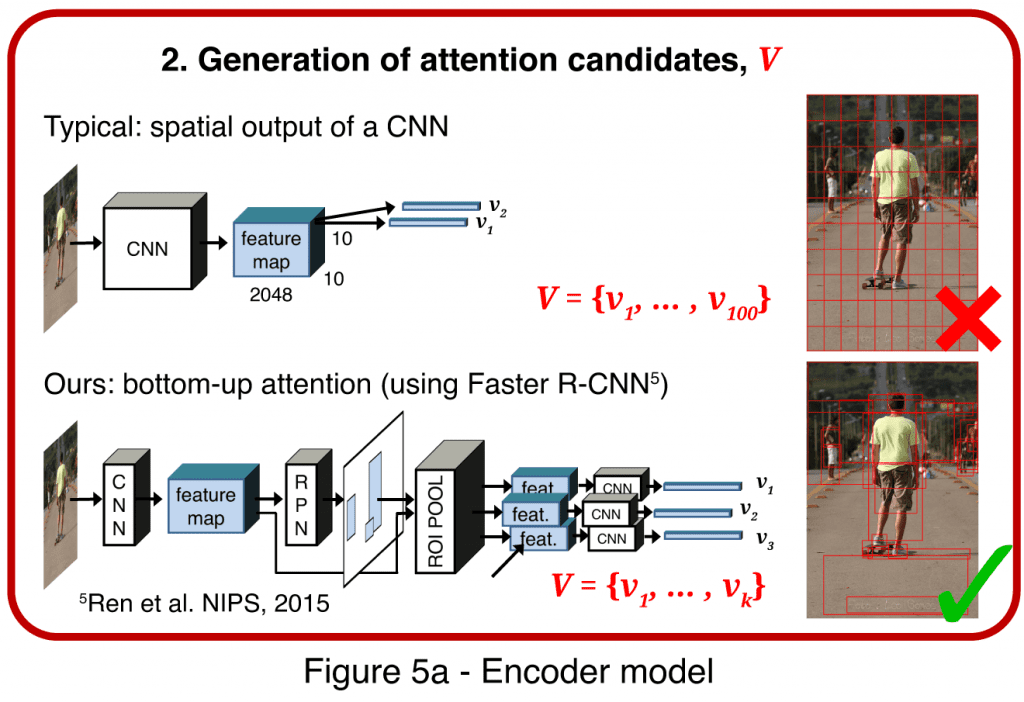

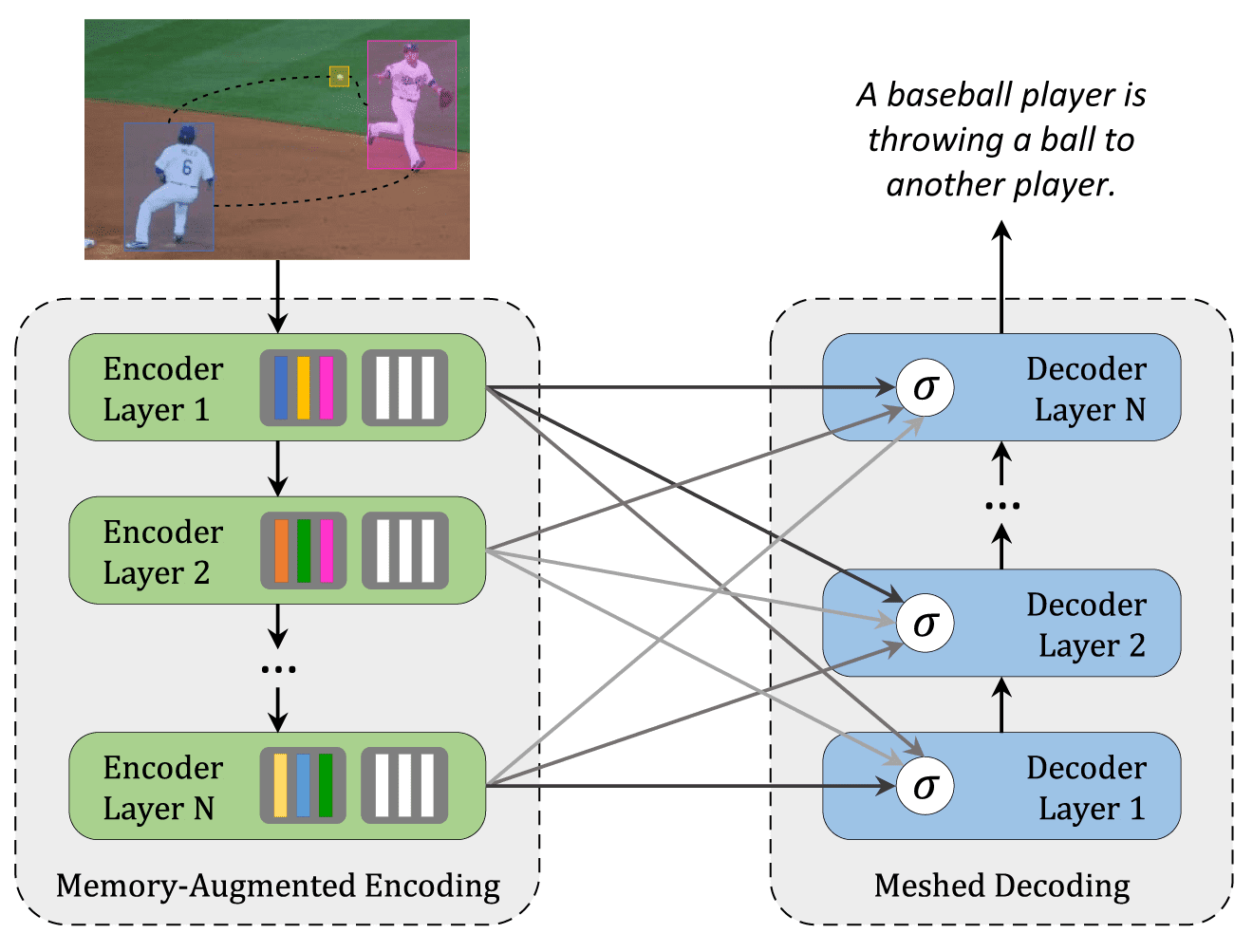

Block Diagram Of An Image Captioning Model Using Deep Learning

Building the model. Our image captioning architecture consists of three models: a CNN, used to extract the image features; a TransformerEncoder, which takes the extracted image features and generates a new representation of the inputs; […]

Automatic photo captioning is a problem where a model must generate a human-readable textual description given a photograph. It is a challenging problem in artificial intelligence that requires both image understanding from the field of computer vision and language generation from the field of natural language processing. It is now possible to develop […]

Image captioning. Image captioning is the task of predicting a caption for a given image. Common real-world applications include aiding visually impaired people by helping them navigate different situations. Image captioning therefore improves content accessibility by describing images to people.

Based on ViT, Wei Liu et al. present an image captioning model (CPTR) using an encoder-decoder transformer. The source image is fed to the transformer encoder as a sequence of patches. Hence, one can treat the image captioning problem as a machine translation task.

Figure 1: Encoder-decoder architecture.
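To make the "sequence of patches" idea concrete, here is a minimal sketch that cuts a single-channel image into non-overlapping square patches and flattens each one, in row-major order. It uses plain Python lists rather than a tensor library, and the function name `image_to_patches` and the toy 4×4 image are illustrative assumptions, not part of CPTR or ViT.

```python
from typing import List


def image_to_patches(image: List[List[float]], patch: int) -> List[List[float]]:
    """Split a 2-D image into non-overlapping patch x patch tiles, each flattened."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    # Walk the tiles left to right, top to bottom, so the output is a sequence.
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            flat = [
                image[r][c]
                for r in range(top, top + patch)
                for c in range(left, left + patch)
            ]
            patches.append(flat)
    return patches


# Toy 4x4 image whose pixel value encodes its position (row * 4 + column).
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, patch=2)
```

Each flattened patch plays the role of one "token" in the encoder input, which is what lets the captioning problem be framed like translation from a patch sequence to a word sequence.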

Image Captioning And Tagging Using Deep Learning Models

`for ex_path, ex_captions in train_raw.take(1): print(ex_path); print(ex_captions)`

Image feature extractor. You will use an image model (pretrained on ImageNet) to extract the features from each image. The model was trained as an image classifier, but setting `include_top=False` returns the model without the final classification layer, so you can […]

To train and evaluate my image caption generator model, I split the Flickr30k dataset into three subsets: training set, validation set, and test set. To train a deep learning model, we need […]
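A three-way split like the one described can be sketched as follows. The source does not state the split ratios, so the 10% validation and 10% test fractions, the fixed seed, and the `split_dataset` helper are all assumptions for illustration. The split is done over image ids so that all captions of one image stay in the same subset.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    items: Sequence[T],
    val_fraction: float = 0.1,   # assumed ratio, not taken from the source
    test_fraction: float = 0.1,  # assumed ratio, not taken from the source
    seed: int = 0,
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once with a fixed seed, then cut into train / validation / test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    n_test = int(len(shuffled) * test_fraction)
    val = shuffled[:n_val]
    test = shuffled[n_val:n_val + n_test]
    train = shuffled[n_val + n_test:]
    return train, val, test


# Hypothetical image ids standing in for the dataset's file names.
image_ids = [f"img_{i}" for i in range(100)]
train_ids, val_ids, test_ids = split_dataset(image_ids)
```

Fixing the seed keeps the subsets reproducible across runs, which matters when validation scores are compared between experiments.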


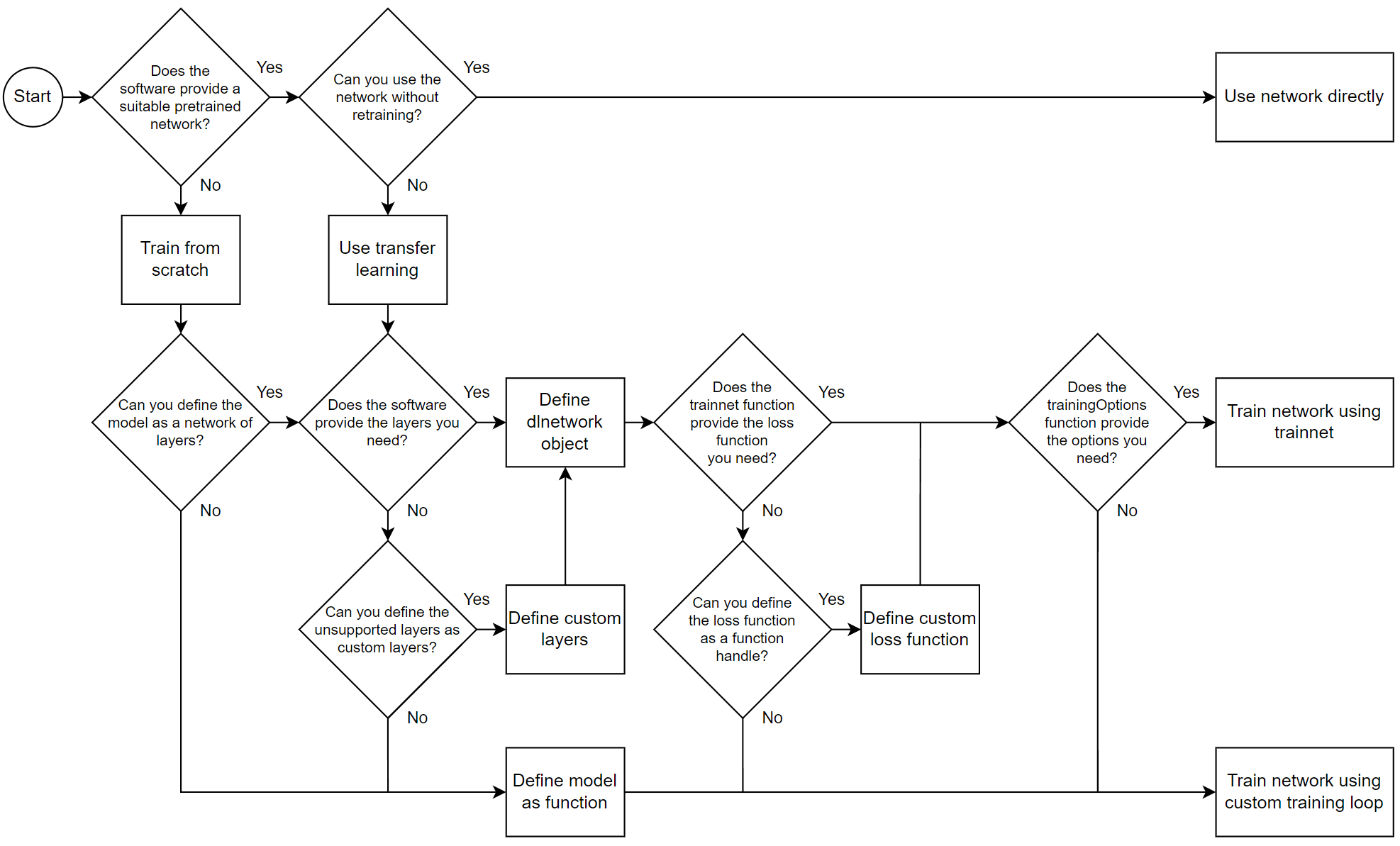

