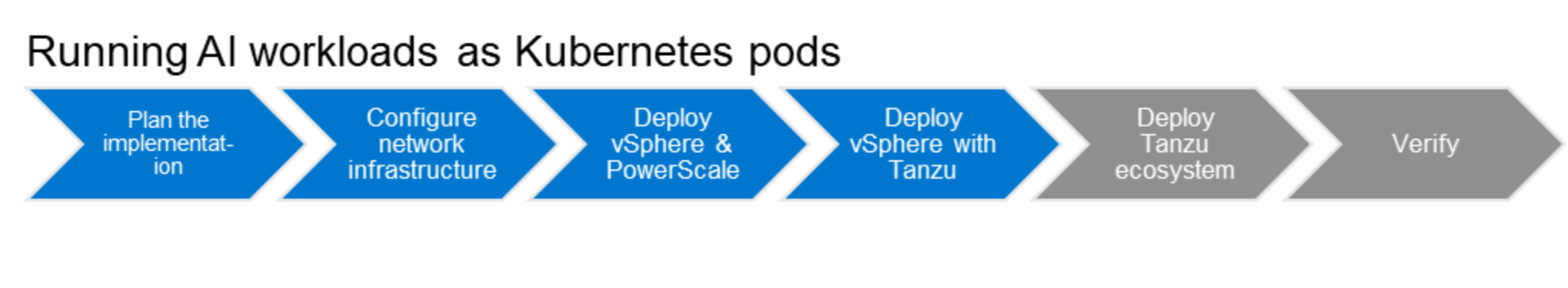

Deploy the Solution for Running AI Workloads as Kubernetes Pods

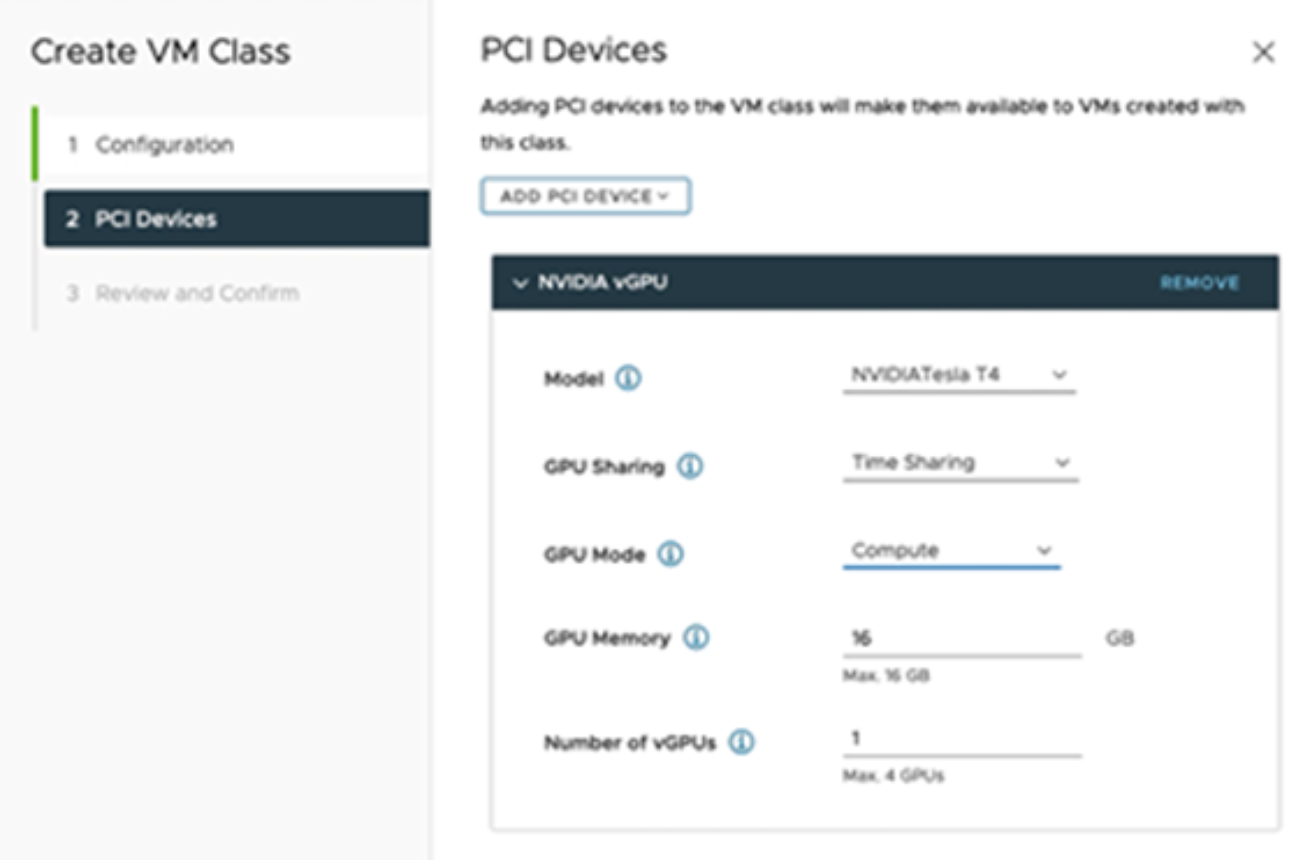

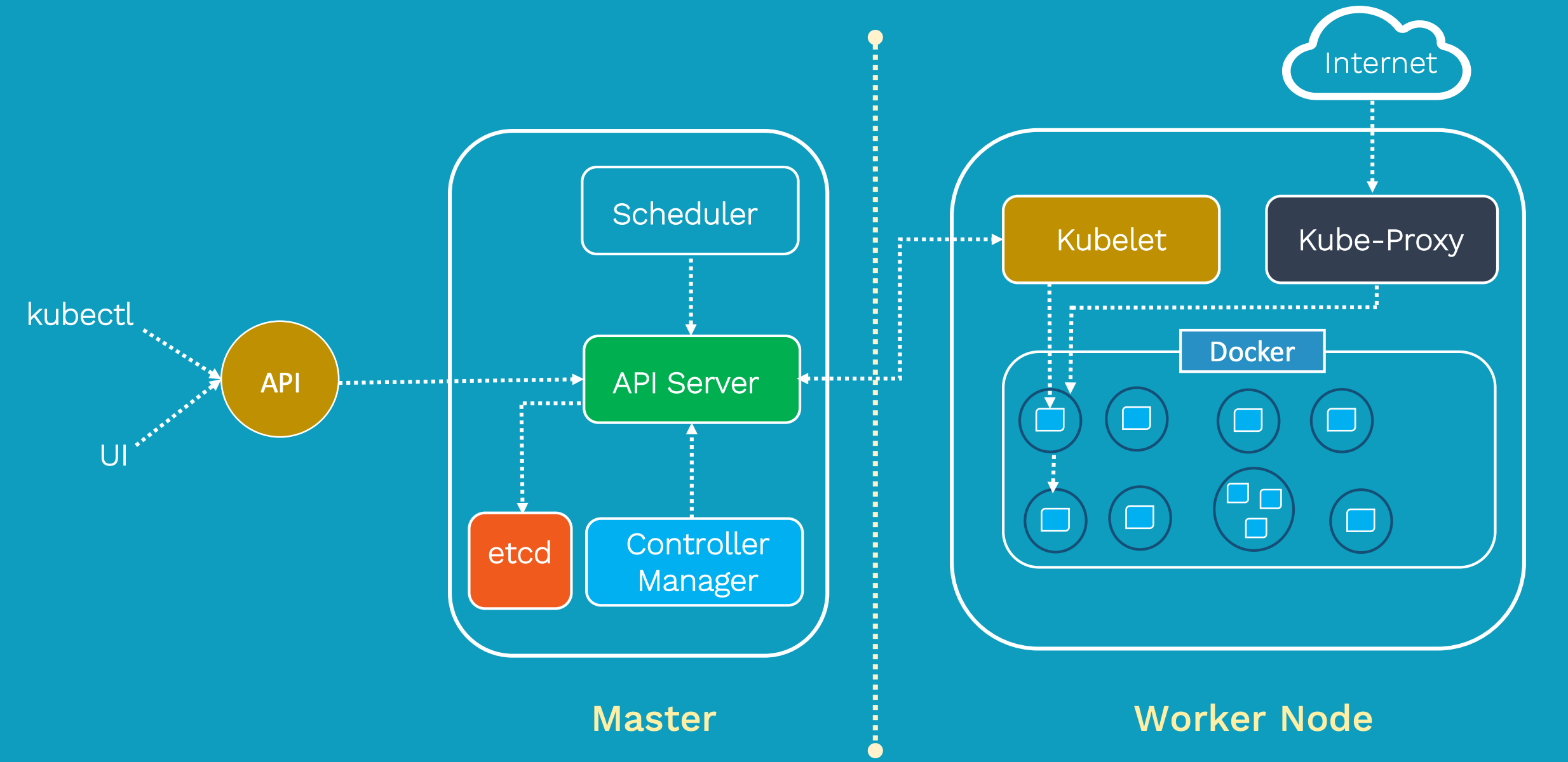

This section provides guidelines for deploying a Tanzu Kubernetes cluster for running AI workloads as Kubernetes pods.

Overview

The following figure shows the software components of VMware vSphere with Tanzu:

Figure 8. VMware vSphere with Tanzu – software components.

Deploying NSX Advanced Load Balancer

Deploying AI/ML applications on Kubernetes provides a robust solution for managing complex AI/ML workloads. One of the primary benefits is scalability: Kubernetes can scale the infrastructure automatically to accommodate varying workloads, allocating resources based on demand.
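To make "running AI workloads as Kubernetes pods" concrete, here is a minimal sketch that generates a Deployment manifest for a GPU-backed model server. It assumes the cluster exposes GPUs as the extended resource `nvidia.com/gpu` (as the NVIDIA device plugin does); the function name `gpu_deployment`, the pod name, and the image reference are hypothetical placeholders, and nothing here is specific to Tanzu.

```python
import json


def gpu_deployment(name: str, image: str, replicas: int = 1, gpus_per_pod: int = 1) -> dict:
    """Build a minimal apps/v1 Deployment manifest for a GPU-backed AI workload.

    Assumes GPUs are advertised as the extended resource `nvidia.com/gpu`.
    `name` and `image` are caller-supplied placeholders.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Extended resources such as GPUs go under limits;
                            # Kubernetes uses the limit as the request when none is given.
                            "resources": {"limits": {"nvidia.com/gpu": gpus_per_pod}},
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical name and image; substitute your own model server.
    manifest = gpu_deployment("llm-server", "registry.example.com/llm-server:latest", replicas=2)
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest can be piped to `kubectl apply -f -`. Scaling the workload up or down then amounts to changing `replicas`, which is the knob the autoscalers discussed below adjust.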

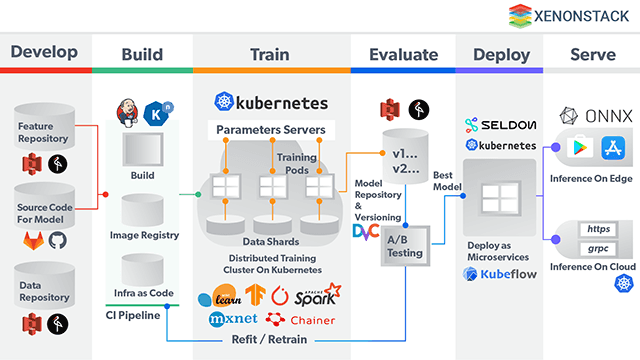

Amazon EKS is a managed service for running Kubernetes workloads on AWS. You can use Amazon EKS to orchestrate NVIDIA NIM microservice pods across multiple nodes, because EKS automatically manages the availability and scalability of the Kubernetes control plane nodes, which are responsible for scheduling containers, managing …

Stateless applications can be deployed on GKE directly from the Workloads menu in the Google Cloud console or through the Kubernetes API. To learn how to deploy a stateless Linux application on GKE, see Deploying a stateless Linux application; if you prefer, you can also learn how to deploy a stateless Windows Server application.

HPA, VPA, and Cluster Autoscaler each have a role. In summary, these are the three ways Kubernetes autoscaling works and benefits AI workloads: the Horizontal Pod Autoscaler (HPA) scales AI model-serving endpoints that must handle varying request rates, and the Vertical Pod Autoscaler (VPA) optimizes resource allocation for AI/ML workloads, ensuring each pod has enough resources for efficient …

A popular way to deploy these workloads is Kubernetes, where the Kubeflow and KServe projects enable them. Recent innovations include the model registry, the Modelcars feature, and TrustyAI …
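The HPA behavior described above rests on a simple ratio documented for the Kubernetes Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below implements only that core rule for a single metric; it deliberately ignores stabilization windows, scaling policies, multiple metrics, and the handling of missing or unready pods. The metric (average requests per second per model-serving pod) and the function name are illustrative assumptions.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the core HPA scaling rule for a single metric.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical target: 100 requests/s per model-serving pod.
    print(hpa_desired_replicas(4, 150, 100))  # 6: load is 1.5x target, scale out
    print(hpa_desired_replicas(4, 105, 100))  # 4: within the 10% tolerance
    print(hpa_desired_replicas(4, 30, 100))   # 2: load dropped, scale in
```

Rounding up with `ceil` biases the rule toward keeping enough replicas to meet the target, which suits latency-sensitive inference endpoints; the real controller additionally smooths scale-in decisions over a stabilization window to avoid flapping.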

Generative AI technology involves tuning and deploying large language models (LLMs) and giving developers access to those models to execute prompts and conversations. Platform teams that standardize on Kubernetes can tune and deploy LLMs on Amazon Elastic Kubernetes Service (Amazon EKS). This post walks you through an end-to-end stack and …

Missing capabilities include gang scheduling, for scaling parallel-processing AI workloads out to multiple distributed nodes, and topology awareness, for optimizing performance.

Scale-out vs. scale-up architecture

Kubernetes was built as a hyperscale system with a scale-out architecture for running services. AI/ML workloads require a different approach.
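Gang scheduling means a group of pods (for example, the workers of one distributed training job) is placed all at once or not at all. Projects such as Volcano and the Kubernetes scheduler-plugins coscheduling plugin provide this for real clusters; the sketch below only illustrates the all-or-nothing principle on a toy model where each node has a count of free GPUs. The function name, node names, and first-fit-decreasing placement heuristic are assumptions chosen for brevity, not how those projects work internally.

```python
from typing import Dict, List, Optional, Tuple


def gang_schedule(
    pod_gpus: List[int], free_gpus: Dict[str, int]
) -> Optional[Tuple[Dict[int, str], Dict[str, int]]]:
    """All-or-nothing placement of a group of pods onto nodes.

    Returns (placement, remaining_capacity) if every pod in the group fits,
    otherwise None. The input capacity map is never modified, so a failed
    attempt leaves the cluster state untouched.
    """
    remaining = dict(free_gpus)  # tentative copy of per-node free GPUs
    placement: Dict[int, str] = {}
    # First-fit decreasing: place the largest pods first. This is a simple
    # heuristic and can miss packings that a smarter search would find.
    for idx in sorted(range(len(pod_gpus)), key=lambda i: pod_gpus[i], reverse=True):
        need = pod_gpus[idx]
        node = next((n for n, free in remaining.items() if free >= need), None)
        if node is None:
            return None  # one member cannot be placed, so none of the gang starts
        remaining[node] -= need
        placement[idx] = node
    return placement, remaining


if __name__ == "__main__":
    workers = [2, 2, 2, 2]  # hypothetical: 4 training workers, 2 GPUs each
    print(gang_schedule(workers, {"node-a": 4, "node-b": 4}))
    print(gang_schedule(workers, {"node-a": 4, "node-b": 2}))  # None: 6 GPUs free, 8 needed
```

In the second case a pod-by-pod scheduler could start three of the four workers, which would hold six GPUs while waiting for a fourth worker that never fits; avoiding that partial start is the point of scheduling the group as a unit.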

