Data Mesh Confluent

How To Build A Data Mesh Using Event Streams

Data mesh: introduction, architectural basics, and implementation. Data mesh is a data architecture framework designed to improve data management and scalability within organizations. By distributing data ownership and capabilities across autonomous, self-contained domains, it aims to make data more efficient, agile, and scalable to work with.

Data mesh is a new approach to designing modern data architectures that embraces organizational constructs as well as data-centric ones such as data management and governance. The idea is that data should be easily accessible and interconnected across the entire business, similar to the way that microservices are a set of principles for designing ...

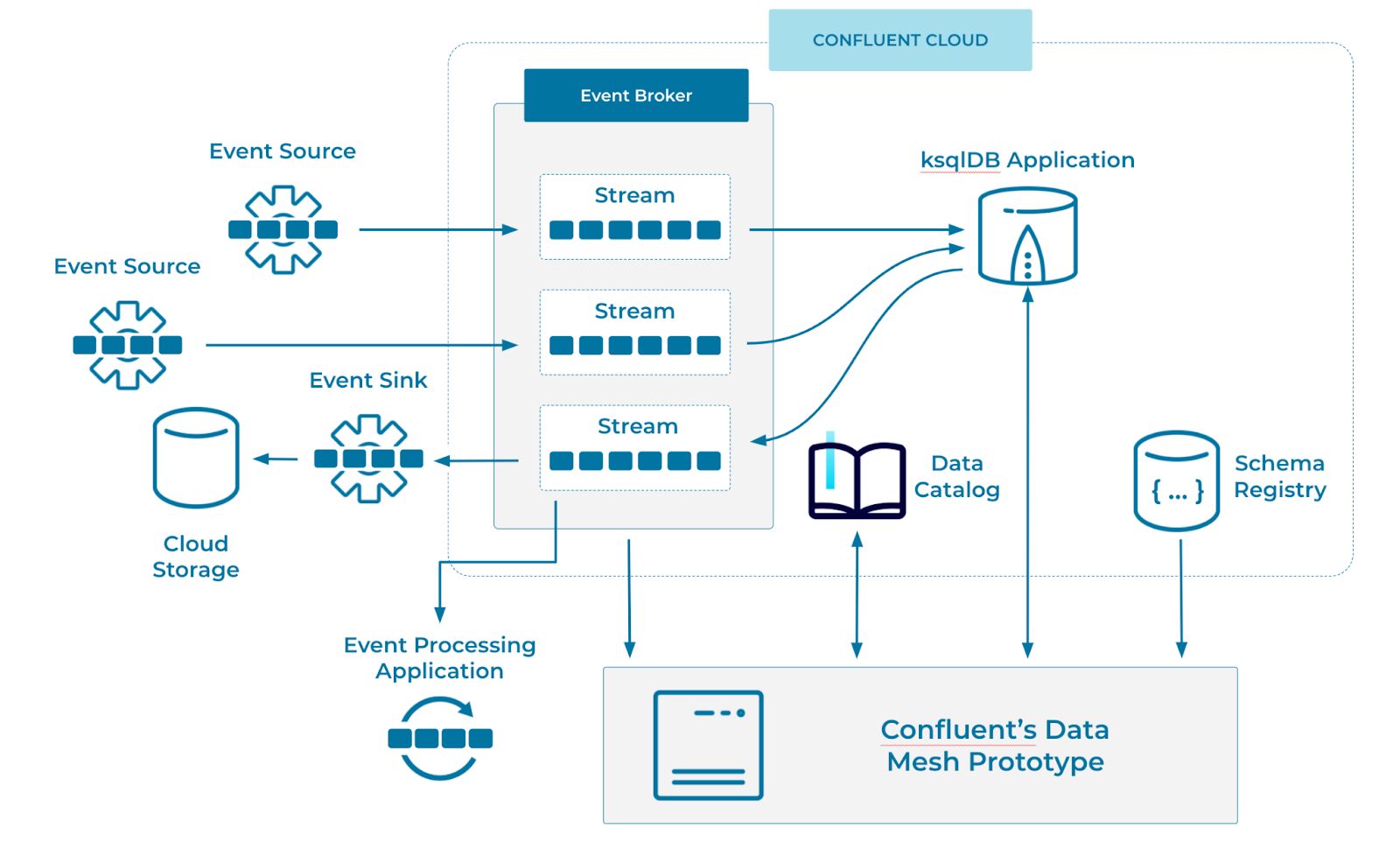

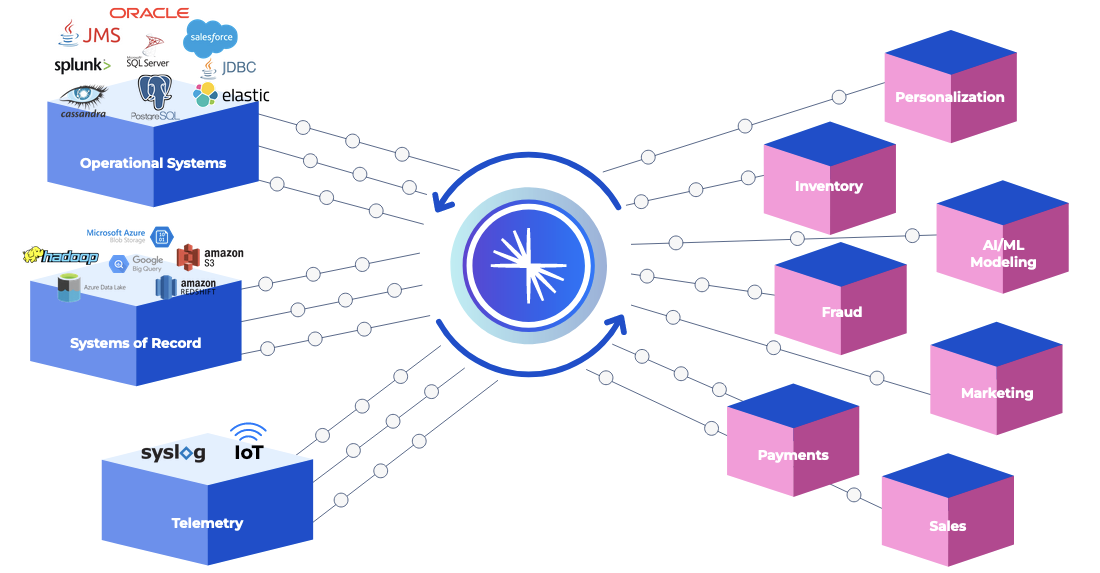

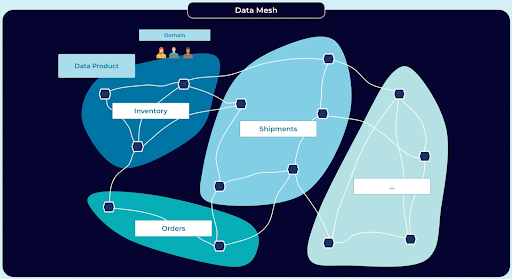

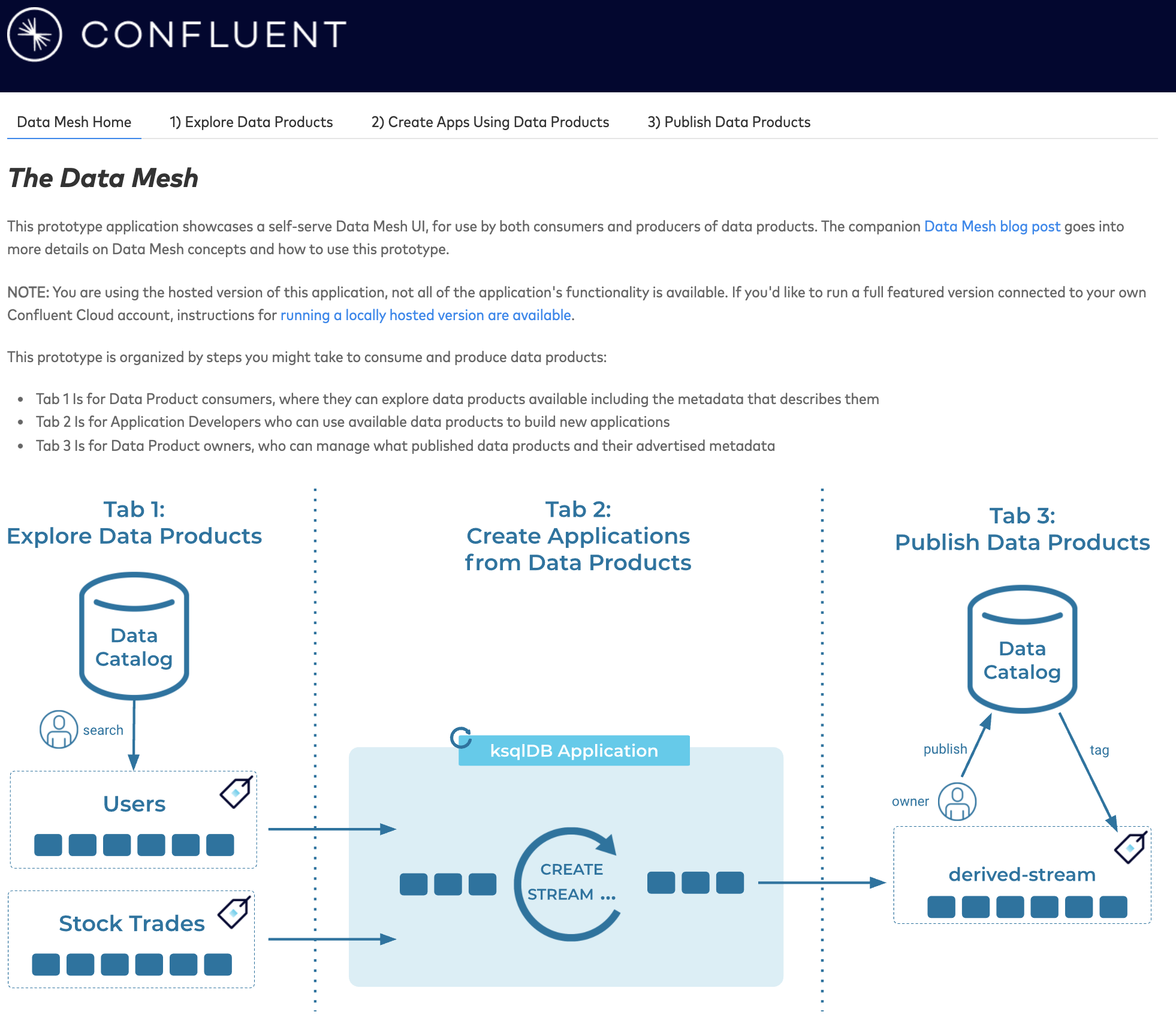

Data Mesh Confluent

Data mesh is a concept that aims to ensure data access, governance, federation, and interoperability across distributed teams and systems. It is a new approach for designing modern data architectures, based on four principles, the first of which is domain ownership: responsibility for modeling and providing important data is distributed to the people closest to it.

Data mesh brings selected business data to the forefront, exposing it as a first-class citizen that systems and processes can couple to directly. Microservices, data fabric, data marts, event streaming, and domain-driven design all influence the data mesh, and these influences are summed up by its four principles, beginning with data ownership by domain ...

Confluent provides capabilities essential for building a comprehensive data mesh. Data mesh heralds a shift from centralized data approaches to a federated, self-serve paradigm, and you can use the Confluent data streaming platform as the foundation for effective data product delivery ... over the years with customer implementations of data mesh.

The data mesh architectural paradigm shift is all about moving analytical data away from a monolithic data warehouse or data lake into a distributed architecture, allowing data to be shared for analytical purposes in real time, right at the point of origin. The idea of data mesh was introduced by Zhamak Dehghani (Director of Emerging Technologies, Thoughtworks) in 2019. Here, she provides an ...
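To make the ideas of domain ownership, self-serve discovery, and federated governance a little more concrete, here is a minimal in-memory sketch. It is only an illustration under stated assumptions: `DataProduct`, `MeshCatalog`, `GovernanceError`, and the required fields `event_id` and `event_time` are hypothetical names chosen for this example and are not part of any Confluent or Kafka API. A real mesh would publish to event streams and a schema registry rather than to a Python dictionary.

```python
from dataclasses import dataclass


@dataclass
class DataProduct:
    """A domain-owned event stream described as a data product (hypothetical model)."""
    name: str      # stream name, e.g. "orders.placed"
    domain: str    # the domain that owns and maintains the stream
    owner: str     # contact for the owning team
    schema: dict   # field name -> type name


class GovernanceError(ValueError):
    """Raised when a data product violates a mesh-wide rule."""


class MeshCatalog:
    """In-memory stand-in for a self-serve catalog with global rules."""

    def __init__(self, required_fields=("event_id", "event_time")):
        # Assumed mesh-wide rule: every product must carry these fields.
        self.required_fields = set(required_fields)
        self._products = {}

    def publish(self, product):
        # Federated governance: global rules are checked at publish time.
        missing = self.required_fields - set(product.schema)
        if missing:
            raise GovernanceError(
                f"{product.name}: missing required fields {sorted(missing)}"
            )
        # Domain ownership: only the owning domain may update its product.
        existing = self._products.get(product.name)
        if existing is not None and existing.domain != product.domain:
            raise GovernanceError(
                f"{product.name} is owned by domain {existing.domain!r}"
            )
        self._products[product.name] = product

    def discover(self, domain=None):
        # Self-serve: any team can list products, optionally by domain.
        return [
            p for p in self._products.values()
            if domain is None or p.domain == domain
        ]


if __name__ == "__main__":
    catalog = MeshCatalog()
    catalog.publish(DataProduct(
        name="orders.placed",
        domain="orders",
        owner="orders-team@example.com",
        schema={"event_id": "string", "event_time": "timestamp", "total": "double"},
    ))
    print([p.name for p in catalog.discover("orders")])
```

The design choice worth noting is that each domain registers and maintains its own product, while the catalog enforces only a small set of shared rules. That split is one possible reading of the federated, self-serve paradigm described above, not a prescribed implementation.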

What Is Data Mesh Complete Tutorial

Cnfl.io data mesh 101 module 1 | Follow along as Tim Berglund (Senior Director of Developer Experience, Confluent) introduces the hyper new concept o... Zhamak and Tim also discuss the next steps needed to bring data mesh to life at the industry level. To learn more about the topic, you can visit the all-new Confluent Developer course, Data Mesh 101. Confluent Developer is a single destination with resources to begin your Kafka journey.


Confluent on LinkedIn: How To Build A Data Mesh Using Event Streams
