Caching Metrics | Hasura GraphQL Docs

Hasura Enterprise Edition exports Prometheus metrics related to caching. These metrics give insight into the efficiency and performance of the caching system, which helps with monitoring and with further optimization of cache utilization. The GraphQL Engine exposes the `hasura_cache_request_count` Prometheus metric.

Hasura Cloud and Enterprise Edition provide a caching layer that can cache the response of a GraphQL query. This can reduce the number of requests to your data sources and improve the performance of your application. You control the cache lifetime and can force the cache to refresh.
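As a rough illustration of how the cache request counter might be used, the sketch below computes a cache hit ratio from text in the Prometheus exposition format. The sample values are made up, and the `status` label with `hit` and `miss` values is an assumption here; check the labels your deployment actually exports. The regular expression also assumes `status` is the only label on each sample.

```python
import re

# Made-up sample in the Prometheus exposition format. The `status` label and
# its "hit"/"miss" values are assumptions for this illustration.
SAMPLE_METRICS = """\
# TYPE hasura_cache_request_count counter
hasura_cache_request_count{status="hit"} 750
hasura_cache_request_count{status="miss"} 250
"""

# Matches one sample line of the counter that carries only a `status` label.
LINE_RE = re.compile(
    r'^hasura_cache_request_count\{status="(?P<status>[^"]+)"\}\s+'
    r"(?P<value>[0-9.eE+-]+)$"
)


def cache_hit_ratio(metrics_text: str) -> float:
    """Return hits / (hits + misses), or 0.0 when no requests were counted."""
    counts = {"hit": 0.0, "miss": 0.0}
    for line in metrics_text.splitlines():
        match = LINE_RE.match(line.strip())
        if match and match.group("status") in counts:
            counts[match.group("status")] += float(match.group("value"))
    total = counts["hit"] + counts["miss"]
    return counts["hit"] / total if total else 0.0


print(cache_hit_ratio(SAMPLE_METRICS))  # prints 0.75
```

A persistently low ratio would suggest that cached queries are rarely repeated within their lifetime, so caching them may add little.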

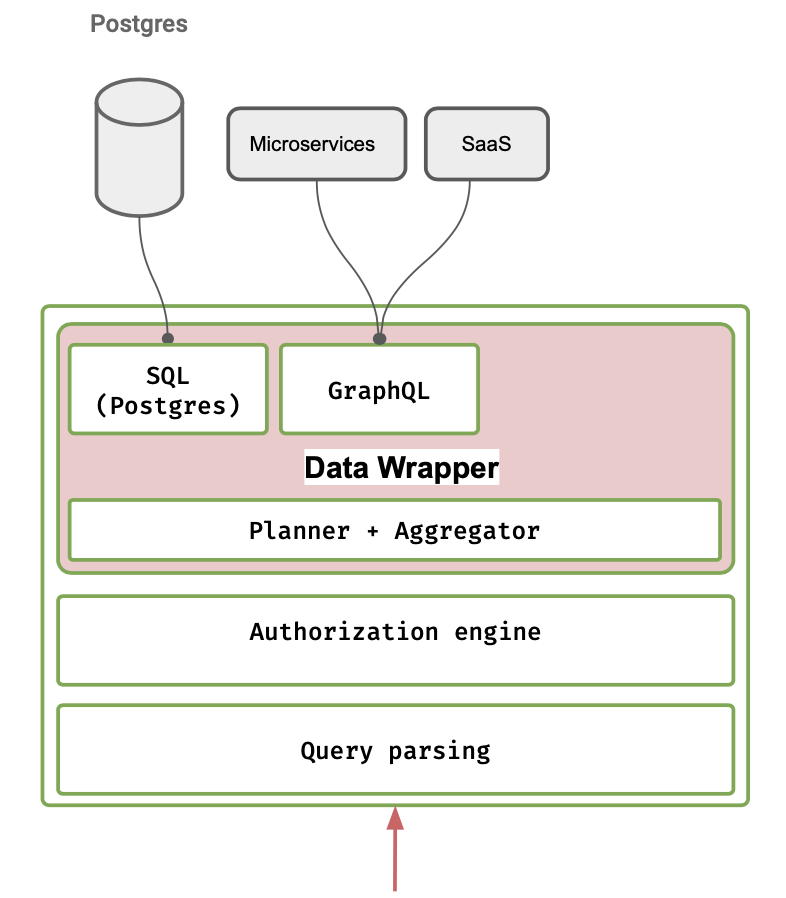

Hasura Cloud and Enterprise Edition support caching of query responses, which can substantially improve performance for queries that are executed frequently. Any GraphQL query can be cached, including queries that use Actions and Remote Schemas. Cached responses are stored for a period of time in an LRU (least recently used) cache and are later removed from the cache.

Query caching works by storing the results of queries. When a query with the same cache key is made, the stored response is served, which reduces the load on the database. To enable caching, apply the `@cached` directive to a query; the time to live (TTL) of cached responses is configurable.

Hasura also maintains an internal cache for query plans. When a GraphQL query plan is created, the query string and variable values are stored in an internal cache, paired with the prepared SQL.
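To show what applying the `@cached` directive with a TTL can look like, here is a minimal sketch that builds a GraphQL request body in Python. The `products` table, its fields, the operation name, and the helper `build_cached_query` are hypothetical, and the default of 60 seconds is just a choice made for this example.

```python
import json


def build_cached_query(ttl_seconds: int = 60) -> dict:
    """Build a GraphQL request body whose query opts in to response caching.

    The `products` table and its fields are hypothetical placeholders.
    """
    if ttl_seconds <= 0:
        raise ValueError("ttl_seconds must be positive")
    query = (
        # The directive is attached to the operation, with the TTL in seconds.
        f"query CachedProducts @cached(ttl: {ttl_seconds}) {{\n"
        "  products {\n"
        "    id\n"
        "    name\n"
        "  }\n"
        "}\n"
    )
    return {"query": query}


# The resulting dictionary can be serialized and sent as the POST body
# of a GraphQL request.
print(json.dumps(build_cached_query(120), indent=2))
```

Keeping the TTL as a parameter makes it easy to give frequently changing data a shorter lifetime than data that rarely changes.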

For self-hosted Hasura Enterprise Edition, use a Redis instance to store cached responses; this can significantly improve performance and scalability. Avoid over-caching: be deliberate about which queries you cache, because caching too much can lead to stale data and more complex cache invalidation. In short, Hasura caching improves the performance of GraphQL queries by storing their results and serving them for subsequent requests without re-querying the database.
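The trade-off between serving stored results and serving stale data can be made concrete with a toy in-memory cache that combines a TTL with least-recently-used eviction. This is a conceptual sketch only, not Hasura's implementation; the class `TtlLruCache` and its parameters are hypothetical names chosen for this example.

```python
import time
from collections import OrderedDict


class TtlLruCache:
    """Toy response cache: entries expire after a TTL, and the least
    recently used entry is evicted when the cache is full."""

    def __init__(self, max_entries: int, ttl_seconds: float, clock=time.monotonic):
        self._max_entries = max_entries
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = OrderedDict()  # key -> (expires_at, value)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None  # cache miss
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: treat as a miss
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        self._entries[key] = (self._clock() + self._ttl, value)
        self._entries.move_to_end(key)
        while len(self._entries) > self._max_entries:
            self._entries.popitem(last=False)  # evict least recently used


# The cache key here is the query text plus its variables, mirroring the idea
# that identical requests map to the same stored response.
cache = TtlLruCache(max_entries=100, ttl_seconds=60)
key = ("query { products { id } }", "{}")
if cache.get(key) is None:
    cache.put(key, {"data": {"products": []}})  # stand-in for a real response
print(cache.get(key))
```

A longer TTL raises the chance of a hit but also the window in which a changed row can still be served from the cache, which is why caching every query indiscriminately tends to backfire.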
