2 Maximum Likelihood For Regression Coefficients Part 1 Of 3 Youtube

In this video, I would like to share how to estimate regression coefficients by the maximum likelihood method in Excel. I hope you have a chance to clearly unders.

Estimating Logistic Regression Coefficients Maximum Likelihood Method

Most of our semester in BIOS 6611 has focused on the ordinary least squares approach to estimation and derivation of our regression coefficients. However, we.

Maximum likelihood estimator(s):

1. $\hat{\beta}_0 = b_0$, same as in the least squares case.
2. $\hat{\beta}_1 = b_1$, same as in the least squares case.
3. $\hat{\sigma}^2 = \frac{\sum_i (y_i - \hat{y}_i)^2}{n}$.
4. Note that the ML estimator $\hat{\sigma}^2$ is biased, since $s^2$ is unbiased and $s^2 = \mathrm{MSE} = \frac{n}{n-2}\hat{\sigma}^2$.

The first entries of the score vector are … the … th entry of the score vector is … The Hessian, that is, the matrix of second derivatives, can be written as a block matrix … Let us compute the blocks: … and … Finally, … Therefore, the Hessian is … By the information equality, we have that … But … and, by the law of iterated expectations, … Thus, … As a consequence, the asymptotic covariance matrix is …

3. $\varepsilon \sim N(0, \sigma^2)$, and is independent of $x$.
4. $\varepsilon$ is independent across observations.

A consequence of these assumptions is that the response variable $y$ is independent across observations, conditional on the predictor $x$, i.e., $y_1$ and $y_2$ are independent given $x_1$ and $x_2$ (exercise 1).
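The score and Hessian steps for the normal linear model can be sketched as follows. This is a reconstruction under stated assumptions, not the equations of the original source: it assumes the model $y_i = x_i^{T}\beta + \varepsilon_i$ with $\varepsilon_i \sim N(0, \sigma^2)$ independent of $x_i$, writes $\ell_i$ for the log-likelihood of a single observation and $H_i$ for its Hessian, and takes $\theta = (\beta, \sigma^2)$ as the parameter vector. All of these symbols are introduced here for illustration.

```latex
\begin{aligned}
\ell_i(\beta,\sigma^2) &= -\tfrac{1}{2}\log(2\pi) - \tfrac{1}{2}\log\sigma^2
  - \frac{(y_i - x_i^{T}\beta)^2}{2\sigma^2} \\[4pt]
\nabla_{\beta}\,\ell_i &= \frac{1}{\sigma^2}\, x_i\,(y_i - x_i^{T}\beta), \qquad
\frac{\partial \ell_i}{\partial \sigma^2} = -\frac{1}{2\sigma^2}
  + \frac{(y_i - x_i^{T}\beta)^2}{2\sigma^4} \\[4pt]
\nabla_{\beta\beta}\,\ell_i &= -\frac{1}{\sigma^2}\, x_i x_i^{T}, \qquad
\frac{\partial}{\partial \sigma^2}\nabla_{\beta}\,\ell_i
  = -\frac{1}{\sigma^4}\, x_i\,(y_i - x_i^{T}\beta), \qquad
\frac{\partial^2 \ell_i}{\partial (\sigma^2)^2}
  = \frac{1}{2\sigma^4} - \frac{(y_i - x_i^{T}\beta)^2}{\sigma^6} \\[4pt]
I(\theta) &= -\mathrm{E}[H_i] =
\begin{pmatrix}
  \dfrac{1}{\sigma^2}\,\mathrm{E}[x_i x_i^{T}] & 0 \\
  0 & \dfrac{1}{2\sigma^4}
\end{pmatrix},
\qquad
V = I(\theta)^{-1} =
\begin{pmatrix}
  \sigma^2 \left(\mathrm{E}[x_i x_i^{T}]\right)^{-1} & 0 \\
  0 & 2\sigma^4
\end{pmatrix}
\end{aligned}
```

The off-diagonal block vanishes in expectation because $\mathrm{E}[x_i \varepsilon_i] = \mathrm{E}[x_i\,\mathrm{E}[\varepsilon_i \mid x_i]] = 0$ by the law of iterated expectations, and the lower-right entry uses $\mathrm{E}[\varepsilon_i^2] = \sigma^2$. Summing the scores over observations and setting them to zero gives the least squares normal equations for $\beta$ and $\hat{\sigma}^2 = \frac{1}{n}\sum_i (y_i - x_i^{T}\hat{\beta})^2$, which agrees with the estimator list.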

Linear Regression A Maximum Likelihood Approach Part 1 Youtube

This is a conditional probability density (CPD) model. Linear regression can be written as a CPD in the following manner: $p(y \mid x, \theta) = \mathcal{N}(y \mid \mu(x), \sigma^2(x))$. For linear regression we assume that $\mu(x)$ is linear, so $\mu(x) = \beta^{T} x$. We must also assume that the variance in the model is fixed (i.e. that it doesn't depend on $x$) and as.

Maximum likelihood estimation. The likelihood function can be maximized w.r.t. the parameter(s) $\Theta$; doing this, one can arrive at estimators for the parameters as well:

$$L(\{x_i\}_{i=1}^{n}, \Theta) = \prod_{i=1}^{n} f(x_i; \Theta)$$

To do this, find solutions (analytically or by following the gradient) to

$$\frac{dL(\{x_i\}_{i=1}^{n}, \Theta)}{d\Theta} = 0.$$
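Both routes to the maximum, the analytic solution and following the gradient, can be compared on a small example. The sketch below is illustrative only: it assumes a one-predictor model with Gaussian noise of fixed variance, uses synthetic data, and all function names (`fit_analytic`, `fit_gradient`, `log_likelihood`, `sse`) as well as the learning rate and step count are hypothetical choices, not taken from any of the videos or notes quoted here.

```python
import math
import random


def sse(x, y, b0, b1):
    """Sum of squared residuals for the line b0 + b1 * x."""
    return sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))


def log_likelihood(x, y, b0, b1, sigma2):
    """Gaussian log-likelihood of y given x with constant variance sigma2."""
    n = len(x)
    return -0.5 * n * math.log(2 * math.pi * sigma2) - sse(x, y, b0, b1) / (2 * sigma2)


def fit_analytic(x, y):
    """Closed-form maximizer in (b0, b1); identical to least squares."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def fit_gradient(x, y, lr=0.02, steps=5000):
    """Gradient ascent on the average log-likelihood in (b0, b1).

    sigma2 only rescales this gradient, so it is fixed at 1 during the ascent.
    """
    n = len(x)
    b0 = b1 = 0.0
    for _ in range(steps):
        resid = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
        b0 += lr * sum(resid) / n
        b1 += lr * sum(r * xi for r, xi in zip(resid, x)) / n
    return b0, b1


# Synthetic data (assumed true values: intercept 2.0, slope 0.5, noise sd 1.0).
random.seed(0)
n = 50
x = [0.2 * i for i in range(n)]
y = [2.0 + 0.5 * xi + random.gauss(0.0, 1.0) for xi in x]

b0, b1 = fit_analytic(x, y)
g0, g1 = fit_gradient(x, y)
sigma2_ml = sse(x, y, b0, b1) / n    # ML estimate of the variance (biased)
s2 = sse(x, y, b0, b1) / (n - 2)     # MSE, the unbiased estimate

print("analytic:", b0, b1)
print("gradient:", g0, g1)
print("sigma2_ml:", sigma2_ml, "s2:", s2)
```

The two fits should agree to within the tolerance of the ascent, and `s2` equals `n / (n - 2) * sigma2_ml`. The fixed step size is only safe for predictors on roughly this scale; for other data it would need tuning, or the predictor could be centered first.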

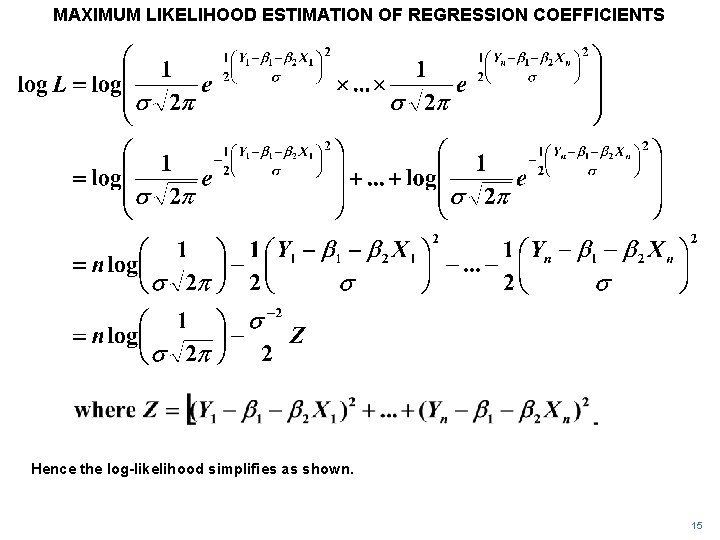

Maximum Likelihood Estimation Of Regression Coefficients Y Y

Logistic Regression Details Pt 2 Maximum Likelihood Youtube
